Legal Probabilism

Legal probabilism is a research program that relies on probability theory to analyze, model and improve the evaluation of evidence and the process of decision-making in trial proceedings. While the expression “legal probabilism” seems to have been coined by Haack (2014b), the underlying idea can be traced back to the early days of probability theory (see, for example, Bernoulli 1713). Another term that is sometimes encountered in the literature is “trial by mathematics” coined by Tribe (1971). Legal probabilism remains a minority view among legal scholars, but attained greater popularity in the second half of the twentieth century in conjunction with the law and economics movement (Becker 1968; Calabresi 1961; Posner 1973).

To illustrate the range of applications of legal probabilism, consider a stylized case. Alice is charged with murder. Traces at the crime scene, which the perpetrator probably left, genetically match Alice. An eyewitness testifies that Alice ran away from the scene after the crime was committed. Another witness asserts that Alice had previously threatened the victim multiple times. Alice, however, has an alibi. Mark claims he was with her for the entire day. This case raises several questions. How should the evidence be evaluated? How to combine conflicting pieces of evidence, such as the incriminating DNA match and the alibi testimony? If the standard of decision in a murder case is proof beyond a reasonable doubt, how strong should the evidence be to meet this standard?

Legal probabilism is a theoretical framework that helps to address these different questions. Finkelstein and Fairley (1970) gave one of the first systematic analyses of how probability theory, and Bayes’ theorem in particular, can help to evaluate evidence at trial (see Section 1). After the discovery of DNA fingerprinting, many legal probabilists focused on how probability theory could be used to quantify the strength of a DNA match (see Section 2). Recent work in Artificial Intelligence made it possible to use probability theory—in the form of Bayesian networks—to evaluate complex bodies of evidence consisting of multiple components (see Section 3). Following the work of Lempert (1977), likelihood ratios are now commonly used as probabilistic measures of the relevance of the evidence presented at trial (see Section 4). Other authors, starting with seminal papers by Kaplan (1968) and Cullison (1969), deployed probability theory and decision theory to model decision-making and standards of proof at trial (see Section 5).

Legal probabilism is no doubt controversial. Many legal theorists and philosophers, starting with Tribe (1971), leveled several critiques against it. These critiques range from difficulties in assessing the probability of someone’s criminal or civil liability to the dehumanization of trial decisions to misconstruing how the process of evidence evaluation and decision-making takes place in trial proceedings.

A key challenge for legal probabilism—one that has galvanized philosophical attention in recent years—comes from the paradoxes of legal proof or puzzles of naked statistical evidence. Nesson (1979), Cohen (1977) and Thomson (1986) formulated scenarios in which, despite a high probability of guilt or civil liability based on the available evidence, a verdict against the defendant seems unwarranted (see Section 6). Other challenges for legal probabilism include the problem of conjunction and the reference class problem (see Section 7).

Legal probabilism can also be understood as a far reaching research program that aims to analyze—by means of probability theory—the trial system as a whole, including institutions such as the jury system and trial procedure. Some early French probability theorists examined the relationship between jury size, jury voting rules and the risk of convicting an innocent (Condorcet 1785; Laplace 1814; Poisson 1837; for more recent discussions, see Kaye 1980; Nitzan 2009; Suzuki 2015).

At the root of this more radical version of legal probabilism lies the dream of discerning patterns in the behavior of individuals and improving legal institutions (Hacking 1990). Today’s rapid grow of data—paired with machine learning and the pervasiveness of cost-benefit analysis—has rendered this dream more alive than ever before (Ferguson 2020). For a critique of mathematization, quantification and cost-benefit analysis applied to the justice system, see Allen (2013) and Harcourt (2018). This entry will not, however, discuss this far reaching version of legal probabilism.

- 1. Probabilistic Toolkit

- 2. The Strength of Evidence

- 3. Bayesian Networks for Legal Applications

- 4. Relevance

- 5. Standards of Proof

- 6. Naked Statistical Evidence

- 7. Further Objections

- Bibliography

- Academic Tools

- Other Internet Resources

- Related Entries

1. Probabilistic Toolkit

This section begins with a review of the axioms of probability and its interpretations, and then shows how probability theory helps to spot mistakes that people may fall prey to when they assess evidence at trial, such as the prosecutor’s fallacy, the base rate fallacy, and the defense attorney’s fallacy. This section also examines how probabilities are assigned to hypotheses and how hypotheses are formulated at different levels of granularity.

1.1 Probability and its interpretation

Standard probability theory consists of three axioms:

| Axiom | In words | In symbols |

|---|---|---|

| Non-negativity | The probability of any proposition \(A\) is greater than or equal to 0. | \(\Pr(A)\geq 0\) |

| Normality | The probability of any logical tautology is 1. | If \(\models A\), then \(\Pr(A)=1\) |

| Additivity | The probability of the disjunction of two propositions \(A\) and \(B\) is the sum of their respective probabilities, provided the two propositions are logically incompatible. | If \(\models \neg (A \wedge B)\), then \(\Pr(A\vee B)=\Pr(A)+\Pr(B)\) |

An important notion in probability theory is that of conditional probability, that is, the probability of a proposition \(A\) conditional on a proposition \(B\), in symbols, \(\Pr( A\pmid B)\). Although it is sometimes taken as a primitive notion, conditional probability is usually defined as the probability of the conjunction \(\Pr(A \wedge B)\) divided by the probability of the proposition being conditioned on, \(\Pr(B)\), or in other words,

\[\Pr(A \pmid B)= \frac{\Pr(A \wedge B)}{\Pr(B)}\quad \text{ assuming \(\Pr(B)\neq 0\).}\]This notion is crucial in legal applications. The fact-finders at trial might want to know the probability of “The defendant was at the scene when the crime was committed” conditional on “Mrs. Dale asserts she saw the defendant run away from the scene”. Or they might want to know the probability of “The defendant is the source of the traces found at the crime scene” conditional on “The DNA expert asserts that the defendant’s DNA matches the traces at the scene.” In general, the fact-finders are interested in the probability of a given hypothesis \(H\) about what happened conditional on the available evidence \(E\), in symbols, \(\Pr(H \pmid E)\).

Most legal probabilists agree that the probabilities ascribed to statements that are disputed in a trial—such as “The defendant is the source of the crime traces” or “The defendant was at the crime scene when the crime was committed”—should be understood as evidence-based degrees of belief (see, for example, Cullison 1969; Kaye 1979b; Nance 2016). This interpretation addresses the worry that since past events did or did not happen, their probabilities should be 1 or 0. Even if the objective chances are 1 or 0, statements about past events could still be assigned different degrees of belief given the evidence available. In addition, degrees of belief are better suited than frequencies for applications to unrepeatable events, such as actions of individuals, which are often the focus of trial disputes Further engagement with these issues lies beyond the scope of this entry (for a more extensive discussion, see the entry on the interpretations of probability as well as Childers 2013; Gillies 2000; Mellor 2004; Skyrms 1966).

Some worry that, except for obeying the axioms of probability, degrees of belief are in the end assigned in a subjective and arbitrary manner (Allen and Pardo 2019). This worry can be alleviated by noting that degrees of belief should reflect a conscientious assessment of the evidence available which may also include empirical frequencies (see §1.2 below and the examples in ENFSI 2015). In some cases, however, the relevant empirical frequencies will not be available. When this happens, degrees of belief can still be assessed by relying on common sense and experience. Sometimes there will be no need to assign exact probabilities to every statement about the past. In such cases, the relevant probabilities can be expressed approximately with sets of probability measures (Shafer 1976; Walley 1991), probability distributions over parameter values, or intervals (see later in §1.4).

1.2 Probabilistic fallacies

Setting aside the practical difficulties of assigning probabilities to different statements, probability theory is a valuable analytical tool in detecting misinterpretations of the evidence and reasoning fallacies that may otherwise go unnoticed.

1.2.1 Assuming independence

A common error that probability theory helps to identify consists in assuming without justification that two events are independent of one another. A theorem of probability theory states that the probability of the conjunction of two events, \(A\wedge B\), equals the product of the probabilities of the conjuncts, \(A\) and \(B\), that is,

\[\Pr(A \wedge B) = \Pr(A)\times \Pr(B),\]provided \(A\) and \(B\) are independent of one another in the sense that the conditional probability \(\Pr(A \pmid B)\) is the same as the unconditional probability \(\Pr(A)\). More formally, note that

\[\Pr(A \pmid B)=\frac{\Pr(A \wedge B)}{\Pr(B)},\]so

\[\Pr(A \wedge B)=\Pr(A)\times \Pr(B)\]provided \(\Pr(A)=\Pr(A \pmid B)\). The latter equality means that learning about \(B\) does not change one's degree of belief about \(A\).

The trial of Janet and Malcolm Collins, a couple accused of robbery in 1964 Los Angeles, illustrates how the lack of independence between events can be overlooked. The couple was identified based on features at the time considered unusual. The prosecutor called an expert witness, a college mathematician, to the stand, and asked him to consider the following features and assume they had the following probabilities: black man with a beard (1 in 10), man with a mustache (1 in 4), white woman with blond hair (1 out of 3), woman with a ponytail (1 out of 10), interracial couple in a car (1 out of 1000), couple driving a yellow convertible (1 out of 10). The mathematician, correctly, calculated the probability of a random couple displaying all these features on the assumption of independence: 1 in 12 million (assuming the individual probability estimates were correct). Relying on this argument, the jury convicted the couple. If those features are so rare in Los Angeles and the robbers had them—the jury must have reasoned—the Collins must be the robbers.

The conviction was later reversed by the Supreme Court of California in People v. Collins (68 Cal.2d 319, 1968). The Court pointed out the mistake of assuming that multiplying the probabilities of each feature would give the probability of their joint occurrence. This assumption holds only if the features in question are probabilistically independent. But this is not the case since the occurrence of, say, the feature “man with a beard” might very well correlate with the feature “man with a mustache”. The same correlation might hold for the features “white woman with blond hair” and “woman with a ponytail”. Besides the lack of independence, another problem is the fact that the probabilities associated with each feature were not obtained by any reliable method.

The British case R. v. Clark (EWCA Crim 54, 2000) is another example of how the lack of independence between events can be easily overlooked. Sally Clark had two sons. Her first son died in 1996 and her second son died in similar circumstances a few years later in 1998. They both died within a few weeks after birth. Could it just be a coincidence? At trial, the paediatrician Roy Meadow testified that the probability that a child from an affluent family such as the Clark’s would die of Sudden Infant Death Syndrome (SIDS) was 1 in 8,543. Assuming that the two deaths were independent events, Meadow calculated that the probability of both children dying of SIDS was

\[\frac{1}{8,543}\times \frac{1}{8,543}, \quad \text{which approximately equals} \quad \frac{1}{73\times 10^6}\]or 1 in 73 million. This impressively low number no doubt played a role in the outcome of the case. Sally Clark was convicted of murdering her two infant sons (though the conviction was ultimately reversed on appeal). The \(\slashfrac{1}{(73\times 10^6)}\) figure rests on the assumption of independence. This assumption is seemingly false since environmental or genetic factors may predispose a family to SIDS (for a fuller discussion of this point, see Dawid 2002; Barker 2017; Sesardic 2007).

1.2.2 The prosecutor’s fallacy

Another mistake that people often make while assessing the evidence presented at trial consists in conflating the two directions of conditional probability, \(\Pr(A\pmid B)\) and \(\Pr(B \pmid A)\). For instance, if you toss a die, the probability that the result is 2 given that it is even (which equals \(\slashfrac{1}{3}\)) is different from the probability that the result is even given that it is 2 (which equals 1).

In criminal cases, confusion about the two directions of conditional probability can lead to exaggerating the probability of the prosecutor’s hypothesis. Suppose an expert testifies that the blood found at the crime scene matches the defendant’s and it is 5% probable that a person unrelated to the crime—someone who is not the source of the blood found at the scene—would match by coincidence. Some may be tempted to interpret this statement as saying that the probability that the defendant is not the source of the blood is 5% and thus it is 95% probable that the defendant is the source. This flawed interpretation is known as the prosecutor’s fallacy, sometimes also called the transposition fallacy (Thompson and Schumann 1987). The 5% figure is the conditional probability \(\Pr(\match \pmid \neg \source)\) that, assuming the defendant is not the source of the crime scene blood \((\neg \source),\) he would still match \((\match).\) The 5% figure is not the probability \(\Pr(\neg \source \pmid \match)\) that, if the defendant matches (\(\match\)), he is not the source (\(\neg \source\)). By conflating the two directions and thinking that

\[\Pr(\neg \source \pmid \match)=\Pr(\match \pmid \neg \source)=5\%,\]one is led to erroneously conclude that \(\Pr(\source \pmid \match)=95\%\).

The same conflation occurred in the Collins case discussed earlier. Even if the calculations were correct, the 1 in 12 million probability that a random couple would have the specified characteristics should be interpreted as \(\Pr(\match\pmid \textsf{innocent})\), not as the probability that the Collins were innocent given that they matched the eyewitness description, \(\Pr(\textsf{innocent}\pmid \match)\). Presumably, the jurors convicted the Collins because they thought it was virtually impossible that they were not the robbers. But the 1 in 12 million figure, assuming it is correct, only shows that \(\Pr(\match\pmid \textsf{innocent})\) equals \(\slashfrac{1}{(12\times 10^6)}\), not that \(\Pr(\textsf{innocent}\pmid \match)\) equals \(\slashfrac{1}{(12\times 10^6)}\).

1.2.3 Bayes’ theorem and the base rate fallacy

The relation between the probability of the hypothesis given the evidence, \(\Pr(H \pmid E)\), and the probability of the evidence given the hypothesis, \(\Pr(E \pmid H)\), is captured by Bayes’ theorem:

\[ \Pr(H \pmid E) = \frac{\Pr(E \pmid H)}{\Pr(E)} \times \Pr(H),\quad\text{ assuming } \Pr(E) \neq 0.\]The probability \(\Pr(H)\) is called the prior probability of \(H\) (it is prior to taking evidence \(E\) into account) and \(\Pr(H\pmid E)\) is the posterior probability of \(H\). This terminology is standard, but is slightly misleading because it suggests a temporal ordering which does not have to be there. Next, consider the ratio

\[\frac{\Pr(E \pmid H)}{\Pr(E)},\]sometimes called the Bayes factor.[1] This is the ratio between the probability \(\Pr(E \pmid H)\) of observing evidence \(E\) assuming \(H\) (often called the likelihood) and the probability \(\Pr(E)\) of observing \(E\). By the law of total probability, \(\Pr(E)\) results from adding the probability of observing \(E\) assuming \(H\) and the probability of observing \(E\) assuming \(\neg H\), each weighted by the prior probabilities of \(H\) and \(\neg H\) respectively:

\[\Pr(E)= \Pr(E \pmid H)\times \Pr(H)+\Pr(E \pmid \neg H)\times \Pr(\neg H).\]As is apparent from Bayes’ theorem, multiplying the prior probability by the Bayes factor yields the posterior probability \(\Pr(H \pmid E)\). Other things being equal, the lower the prior probability \(\Pr(H)\), the lower the posterior probability \(\Pr(H \pmid E)\). The base rate fallacy consists in ignoring the effect of the prior probability on the posterior (Kahneman and Tversky 1973). This leads to thinking that the posterior probability of a hypothesis given the evidence is different than it actually is (Koehler 1996).

Consider the blood evidence example discussed previously. By Bayes’ theorem, the two conditional probabilities \(\Pr(\neg \source \pmid \match)\) and \(\Pr(\match \pmid \neg \source)\) are related as follows:

\[ \Pr(\neg \source \pmid \match) = \\ \frac{\Pr(\match \pmid \neg \source)\times \Pr(\neg \source)} {\Pr(\match \pmid \neg \source)\times \Pr(\neg \source) + \Pr(\match \pmid \source)\times \Pr(\source)}. \]Absent any other compelling evidence to the contrary, it should initially be very likely that the defendant, as anyone else, had little to do with the crime. Say, for illustrative purposes, that the prior probability \(\Pr(\neg \source)= .99\) and so \(\Pr(\source)=.01\) (more on how to assess prior probabilities later in §1.4). Next, \(\Pr(\match \pmid \source)\) can be set approximately to \(1\), because if the defendant were the source of the blood at the crime scene, he should match the blood at the scene (setting aside the possibility of false negatives). The expert estimated the probability that someone who is not the source would coincidentally match the blood found at the scene—that is, \(\Pr(\match \pmid \neg \source)\)—as equal to .05. By Bayes’ theorem,

\[ \Pr(\neg \source \pmid \match) = \frac{.05}{(.05 \times .99)+ (1 \times .01)} \times .99 \approx .83.\]The posterior probability that the defendant is the source, \(\Pr(\source\pmid \match)\) is roughly \(1-.83=.17\), much lower than the exaggerated value of .95. This posterior probability would have dropped even lower if the prior probability were lower. A similar analysis applies to the Collins case. If the prior probability of the Collins’s guilt is sufficiently low—say in 1 in 6 million—the posterior guilt probability given the match with the eyewitness description would be roughly .7, much less impressive than

\[\left(1-\frac{1}{12\times 10^6}\right)\approx .9999999.\]1.2.4 Defense attorney’s fallacy

Although base rate information should not be overlooked, paying excessive attention to it and ignoring other evidence leads to the so-called defense attorney’s fallacy. As before, suppose the prosecutor expert testifies that the defendant matches the traces found at the crime scene and there is a 5% probability that a random person, unrelated to the crime, would coincidentally match, so \(\Pr(\match\pmid \neg\source)=.05\). To claim that it is 95% likely that the defendant is the source of the traces, or in symbols, \(\Pr(\source\pmid \textsf{ match})=.95\), would be to commit the prosecutor’s fallacy described earlier. But suppose the defense argues that, since the population in town is 10,000 people, \(5\%\) of them would match by coincidence, that is, \(10,000\times 5\%=500\) people. Since the defendant could have left the traces as any of the other 499 people, he is \(\slashfrac{1}{500}=.2\%\) likely to be the source, a rather unimpressive figure. The match—the defense concludes—is worthless evidence.

This analysis portrays the prosecutor’s case as weaker than it need be. If the investigators narrowed down the set of suspects to, say, 100 people—a potential piece of information the defense ignored—the match would make the defendant 16% likely to be the source. This fact can be verified using Bayes’ theorem (left as an exercise for the reader). Even assuming, as the defense claims, that the pool of suspects comprises as many as 10,000 people, the prior probability that the defendant is the source would be \(\slashfrac{1}{10,000}\), and since the defendant matches the traces, this probability should rise to (approximately) \(\slashfrac{1}{500}\). Given the upward shift in the probability from \(\slashfrac{1}{10,000}\) to \(\slashfrac{1}{500}\), the match should not be considered worthless evidence (more on this later in §2.1).

1.3 Odds version of Bayes’ theorem

The version of Bayes’ theorem considered so far is informationally demanding since the law of total probability—which spells out \(\Pr(E)\), the denominator in the Bayes factor \(\slashfrac{\Pr(E \pmid H)}{\Pr(E)}\)—requires one to consider \(H\) and the catch-all alternative hypothesis \(\neg H\), comprising all possible alternatives to \(H\). A simpler version of Bayes’ theorem is the so-called odds formulation:

\[ \frac{\Pr(H \pmid E)} {\Pr(H' \pmid E)} = \frac{\Pr(E \pmid H)}{\Pr(E \pmid H')} \times \frac{\Pr(H)} {\Pr(H')},\]or in words:

\[\textit{posterior odds} = \textit{likelihood ratio} \times \textit{prior odds}.\]The ratio

\[\frac{\Pr(H)}{\Pr(H')}\]represents the prior odds, where \(H\) and \(H'\) are two competing hypotheses, not necessarily one the complement of the other. The likelihood ratio

\[\frac{\Pr(E \pmid H)}{\Pr(E \pmid H')}\]compares the probability of the same item of evidence \(E\) given the two hypotheses. The posterior odds

\[\frac{\Pr(H \pmid E)}{\Pr(H' \pmid E)}\]compares the probabilities of the hypotheses given the evidence. This ratio is different from the probability \(\Pr(H \pmid E)\) of a specific hypothesis given the evidence.

As an illustration, consider the Sally Clark case (previously discussed in §1.2). The two hypotheses to compare are that Sally Clark’s sons died of natural causes (natural) and that Clark killed them (kill). The evidence available is that the two sons died in similar circumstances one after the other (two deaths). By Bayes’ theorem (in the odds version),

\[\frac{\Pr(\textsf{kill} \pmid \textsf{two deaths})}{\Pr(\textsf{natural} \pmid \textsf{two deaths})} = \frac{\Pr(\textsf{two deaths} \pmid \textsf{kill})}{\Pr(\textsf{two deaths} \pmid \textsf{natural})} \times \frac{\Pr(\textsf{kill})}{\Pr(\textsf{natural})}.\]The likelihood ratio

\[\frac{\Pr(\textsf{two deaths} \pmid \textsf{kill})}{\Pr(\textsf{two deaths} \pmid \textsf{natural})}\]compares the likelihood of the evidence (two deaths) under each hypothesis (kill v. natural). Since under either hypothesis both babies would have died—by natural causes or killing—the ratio should equal to one (Dawid 2002). What about the prior odds

\[\frac{\Pr(\textsf{kill})}{\Pr(\textsf{natural})}?\]Recall the \(\slashfrac{1}{(73\times 10^6)}\) figure given by the pediatrician Roy Meadow. This figure was intended to convey how unlikely it was that two babies would die of natural causes, one after the other. If it is so unlikely that both would die of natural causes—one might reason—it must be likely that they did not actually die of natural causes and quite likely that Clark killed them. But did she?

The prior probability that they died of natural causes should be compared to the prior probability that a mother would kill them. To have a rough idea, suppose that in a mid-size country like the United Kingdom 1 million babies are born every year of whom 100 are murdered by their mothers. So the chance that a mother would kill one baby in a year is 1 in 10,000. What is the chance that the same mother kills two babies? Say we appeal to the (controversial) assumption of independence. On this assumption, the chance that a mother kills two babies equals 1 in 100 million. Assuming independence,

\[\Pr(\textsf{natural})=\frac{1}{73\times10^6}\]and

\[\Pr(\textsf{kill})=\frac{1}{100\times 10^6}.\]This means that the prior odds would equal .73. With a likelihood ratio of one, the posterior odds would also equal .73. On this analysis, that Clark killed her sons would be .73 times less likely than they died of natural causes, or in other words, the natural cause hypothesis is 1.37 times more likely than the hypothesis that Clark killed her sons.

A limitation of this analysis should be kept in mind. The .73 ratio is a measure of the probability of one hypothesis compared to another. From this ratio alone, the posterior probability of the individual hypotheses cannot be deduced. Only if the competing hypotheses \(H\) and \(H'\) are exclusive and exhaustive, say one is the negation of the other, can the posterior probability be derived from the posterior odds

\[PO=\frac{P(H \pmid E)}{ P(H' \pmid E)}\]via the equality

\[\Pr(H\pmid E)= \frac{PO}{1+PO}.\]But the hypotheses kill and natural are not exhaustive, since the two babies could have died in other ways. So the posterior odds

\[\frac{\Pr(\textsf{kill} \pmid \textsf{two deaths})}{\Pr(\textsf{natural} \pmid \textsf{two deaths})}\]cannot be translated into the posterior probabilities of individual hypotheses. (A more sophisticated probabilistic analysis is presented in §3.3.)

1.4 Where do the numbers come from?

In the examples considered so far, probabilities were assigned on the basis of empirical frequencies and expert opinions. For example, an expert testimony that only 5% of people possess a particular blood type was used to set \(\Pr(\match \pmid \neg \source)=.05\). Probabilities were also assigned on the basis of common sense, for example, \(\Pr(\match \pmid \source)\) was set to 1 assuming that if someone is the source of the crime traces, the person must match the crime traces (setting aside the possibility of framing, fabrication of the traces or false negatives in the test results). But probabilities need not always be assigned exact values. Agreeing on an exact value can in fact be extremely difficult, especially for prior probabilities. This is a challenge for legal probabilism (see later in §7.3). One way to circumvent this challenge is to avoid setting exact values and adopt plausible intervals. This method is based on sensitivity analysis, an assessment of how prior probabilities affect other probabilities.

Consider the paternity case State v. Boyd (331 N.W.2d 480, Minn. 1983). Expert witness Dr. Polesky testified that 1,121 unrelated men would have to be randomly selected from the general population before another man could be found with all the appropriate genes to have fathered the child in question. This formulation can be misleading since the expected number of matching DNA profiles need not be the same as the number of matching profiles actually found in a population (the error of thinking otherwise is called the expected value fallacy). On a more careful formulation, the probability that a random person would be a match is \(\slashfrac{1}{1121}\), or in symbols,

\[\Pr(\match\pmid \neg \textsf{father})=\frac{1}{1121}.\]As explained earlier in §1.2.3, this probability cannot be easily translated into the probability that a person whose genetic profile matches is the father, or in symbols, \(\Pr(\textsf{father}\pmid \match)\). The latter should be calculated using Bayes’ theorem:

\[\begin{align} \Pr(\textsf{father}\pmid \match) & = \frac{ \Pr(\match\pmid \textsf{father}) \times \Pr(\textsf{father}) } { \Pr(\match \pmid \textsf{father}) \times \Pr(\textsf{father}) + \Pr(\match \pmid \textsf{not-father}) \times \Pr(\textsf{not-father}) } \end{align}\]In actual practice, the formula to establish paternity is more complicated (Kaiser and Seber, 1983), but for the sake of illustration, we abstract away from this complexity. Suppose the prior probability that the defendant would be the father is as low as .01, or in symbols, \(\Pr(\textsf{father})=.01\). Plugging in this prior probability into Bayes theorem, along with \(\Pr(\textsf{m}\pmid \textsf{father})=1\) and \(\Pr(\textsf{m}\pmid \textsf{not-father})=1/1,121\), gives a probability of paternity \(\Pr(\textsf{father}\pmid \textsf{m})\) that is equal to about .92.

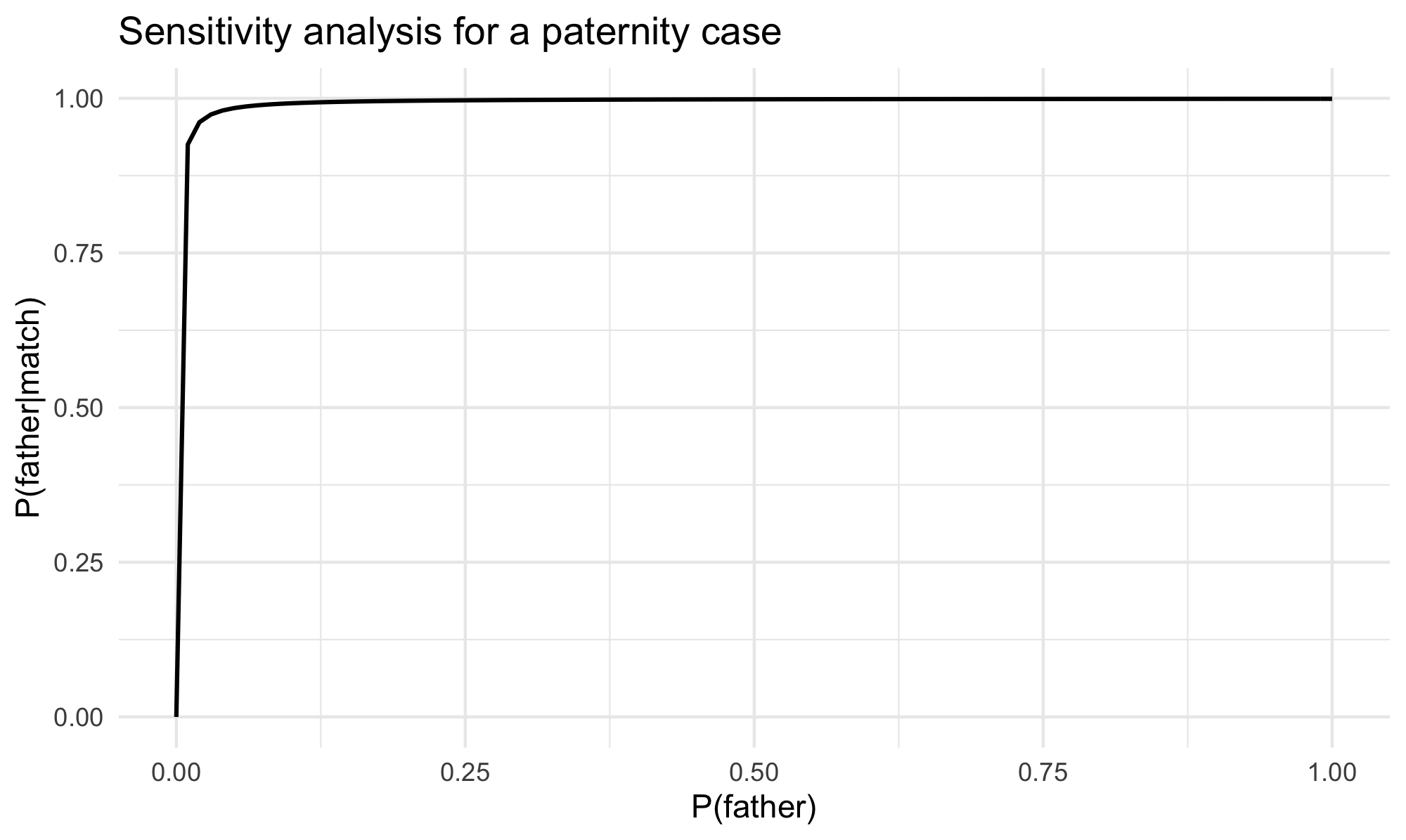

But why take the prior probability \(\Pr(\textsf{father})\) to be .01 and not something else? The idea in sensitivity analysis is to look at a range of plausible probability assignments and investigate the impact of such choices. In legal applications, the key question is whether assignments that are most favorable to the defendant would still return a strong evidentiary case against the defendant. In the case at hand, the expert testimony is so strong that for a wide spectrum of the prior probability \(\Pr(\textsf{father})\), the posterior probability \(\Pr(\textsf{father} \pmid \match)\) remains high, as the plot in figure 1 shows.

Figure 1

The posterior surpasses .9 once the prior is above approximately .008. In a paternity case, given the mother’s testimony and other evidence, it is clear that the probability of fatherhood before the expert testimony is taken into account should be higher than that, no matter what the prior probability should exactly be. Without settling on an exact value, the interval of values above .008 would guarantee a posterior probability of paternity to be at least .9. This is amply sufficient to meet the preponderance standard that governs civil cases such as paternity disputes (on standards of proof, see later in Section 5.)

1.5 Source, activity and offense level hypotheses

Difficulties in assessing probabilities go hand in hand with the choice of the hypotheses of interest. To some approximation, hypotheses can be divided into three levels: offence, activity, and source level hypotheses. At the offence level, the issue is whether the defendant is guilty, as in the statement “Smith is guilty of manslaughter”. At the activity level, hypotheses describe what happened and what those involved did or did not do. An example of activity level hypothesis is “Smith stabbed the victim”. Finally, source level hypotheses describe the source of the traces, such as “The victim left the stains at the scene”, without specifying how the traces got there. Overlooking differences in hypothesis level can lead to serious confusions.

Consider a case in which a DNA match is the primary incriminating evidence. DNA evidence is one of the most widely used forms of quantitative evidence currently available, but it does not work much differently from blood evidence or other forms of trace evidence. In testifying about a DNA match, trial experts will often assess the probability that a random person, unrelated to the crime, would coincidentally match the crime stain profile (Foreman et al. 2003). This is called the Genotype Probability or Random Match Probability, which can be extremely low, in the order of 1 in 100 million or even lower (Donnelly 1995; Kaye and Sensabaugh 2011; Wasserman 2002). It is tempting to equate the random match probability to \(\Pr(\match \pmid \textsf{innocence})\) and together with the prior \(\Pr(\textsf{innocence})\) use Bayes’ theorem to calculate the posterior probability of innocence \(\Pr(\textsf{innocence} \pmid \match)\). This would be a mistake. Applying Bayes’ theorem is of course recommended and helps to avoid the prosecutor’s fallacy, the conflation of \(\Pr(\textsf{innocence} \pmid \match)\) and \(\Pr(\match \pmid \textsf{innocence})\). The problem here lies elsewhere. Equating the random match probability with \(\Pr(\match \pmid \textsf{innocence})\) overlooks the difference between offense, activity and source level hypotheses. A DNA match cannot speak directly to the question of guilt or innocence. Even if the suspect is the source of the genetic material at the scene, the match does not establish that the defendant did visit the scene and came into contact with the victim, and even if they did, it does not follow that they committed the crime they were accused of.

Few forms of evidence can speak directly to offense level hypotheses. Circumstantial evidence that is more amenable to a probabilistic quantification, such as DNA matches and other trace evidence, does not. Eyewitness testimony may speak more directly to offense level hypotheses, but it is also less easily amenable to a probabilistic quantification (see however Friedman 1987, recent results by Wixted and Wells 2017, and a survey of related issues by Urbaniak et al. 2020). This makes it difficult to assign probabilities to offense level hypotheses. Moving beyond source level hypotheses requires a close collaboration between scientists, investigators and attorneys (see Cook et al. 1998 for a discussion).

2. The Strength of Evidence

The posterior probability of a hypothesis given the evidence should not be confused with the strength (or probative value, weight) of the evidence in favor of the hypothesis. The strength of an item of evidence reflects its impact on the probability of the hypothesis. Suppose the prior probability of \(H\) is extremely low, say \(\Pr(H)=.001\), but taking evidence \(E\) into account brings this probability up to 35%, that is, \(\Pr(H \pmid E)=.35\). This is a dramatic upward shift. Even though the posterior probability of \(H\) given \(E\) is not high, \(E\) strongly favors \(H\). This section examines how probability theory helps to assess the strength of evidence.

2.1 Bayes factor v. likelihood ratio

The notion of strength of evidence—as distinct from prior and posterior probability—can be captured formally in a number of different ways (for a comprehensive discussion, see the entry on confirmation theory). One measure of the strength of evidence is the Bayes factor

\[\frac{\Pr(E \pmid H)}{\Pr(E)}\](already discussed in §1.2.3). This is an intuitively plausible measure of evidential strength. Note that by Bayes’ theorem

\[ \Pr(H \pmid E) = \textit{BayesFactor}(H, E) \times \Pr(H),\]and thus the Bayes factor is greater than one if and only if the posterior probability \(\Pr(H \pmid E)\) is higher than the prior probability \(\Pr(H)\). The greater the Bayes factor (for values above one), the greater the upward shift from prior to posterior probability, the more strongly \(E\) positively supports \(H\). Conversely, the smaller the Bayes factor (for values below one), the greater the downward shift from prior to posterior probability, the more strongly \(E\) negatively supports \(H\). If \(\Pr(H)=\Pr(H\pmid E)\) the evidence has no impact, upwards or downwards, on the prior probability of \(H\).

The Bayes factor is an absolute measure of evidence \(E\)’s support toward \(H\) since it compares the probability of \(E\) under hypothesis \(H\) against the probability of \(E\) in general. The denominator is calculated following the law of total probability:

\[\Pr(E)= \Pr(E \pmid H) \Pr(H)+\Pr(E \pmid \neg H) \Pr(\neg H).\]The catch-all alternative hypothesis \(\neg H\) can be replaced by a more fine-grained set of alternatives, say \(H_1, H_2, \dots H_k\), provided \(H\) and its alternatives cover the entire space of possibilities. The law of total probability would then read:

\[\Pr(E) = \Pr(E\pmid H)\Pr(H) +\sum_{i=1}^k \Pr(E\pmid H_i)\Pr(H_i).\]Instead of Bayes factor, the strength of evidence can be assessed by means of the likelihood ratio, a comparative measure of whether evidence \(E\) supports a hypothesis \(H\) more than a competing hypothesis \(H'\), in symbols,

\[\frac{\Pr(E \pmid H)}{\Pr(E \pmid H')}.\]An expert, for instance, may testify that the blood-staining on the jacket of the defendant is ten times more likely to be seen if the wearer of the jacket hit the victim (hypothesis \(H\)) rather than if he did not (hypothesis \(H'\)) (Aitken, Roberts, & Jackson 2010, 38). If the evidence supports \(H\) more than \(H'\), the ratio would be above one, and if the evidence supports \(H'\) more than \(H\), the ratio would be below one. The greater the likelihood ratio (for values above one), the stronger the evidence in favor of \(H\) as contrasted with \(H'\). The smaller the likelihood ratio (for values below one), the stronger the evidence in favor of the competing hypothesis \(H'\) as contrasted with \(H\).

The relationship between likelihood ratio

\[\frac{\Pr(E \pmid H)}{\Pr(E \pmid H')}\]and posterior odds

\[\frac{\Pr(H \pmid E)}{\Pr(H' \pmid E)}\]is apparent in the odds version of Bayes’ theorem (see earlier in §1.3). If the likelihood ratio is greater (lower) than one, the posterior odds will be greater (lower) than the prior odds of \(H\). The likelihood ratio, then, is a measure of the upward or downward impact of the evidence on the odds of two hypotheses \(H\) and \(H'\).

A competitor of the likelihood ratio as a measure of evidentiary strength is an even simpler notion, the probability \(\Pr(E \pmid H)\). It is tempting to think that, whenever \(\Pr(E \pmid H)\) is low, \(E\) should be strong evidence against \(H\). Consider an example by Triggs and Buckleton (2014). In a child abuse case, the prosecutor offers evidence that a couple’s child rocks (a movement pattern) and that only 3% of non-abused children rock, \(\Pr(\textsf{child rocks} \pmid \textsf{no abuse})=.03\). If it is unlikely that a non-abused child would rock, the fact that this child rocks might seem strong evidence of abuse. But this reading of the 3% figure is mistaken. It could well be that 3% of abused children rock, \(\Pr(\textsf{child rocks} \pmid \textsf{abuse})=.03\). If rocking is unlikely under either hypothesis—which means the likelihood ratio

\[\frac{\Pr(\textsf{child rocks} \pmid \textsf{abuse})}{\Pr(\textsf{child rocks} \pmid \textsf{no abuse})}\]equals one—rocking cannot count as evidence of abuse. In order to avoid exaggerations of the evidence, it is best to assess it by means of the likelihood ratio rather the probability of the evidence given a hypothesis (ENFSI 2015; Royall 1997).

2.2 Cold-hit DNA matches

To better understand likelihood ratios, it is instructive to look at DNA evidence as a case study, focusing in particular on cold-hit matches. DNA evidence may be used to corroborate other evidence in a case or as the primary incriminating evidence. Suppose different investigative leads point to an individual, Mark Smith, as the perpetrator. Suppose the investigators also find several traces at the crime scene left by the perpetrator, and laboratory analyses show that the genetic profile associated with the traces matches Smith. In this scenario, the DNA match corroborates the other evidence against Smith. In contrast, suppose no investigative lead allowed the police to identify a suspect. The only evidence consists of the traces found at the crime scene. The police run the genetic profile associated with the traces through a database of profiles and find a match, a so-called cold-hit. Since in cold-hit cases there is no other evidence, cold-hit matches are the primary item of evidence against the defendant. Some scholars believe that this circumstance weakens the evidentiary value of the match. Others disagree. We now examine some of the main arguments in this debate.

2.2.1 Random match v. database match

Suppose an expert testifies that the crime traces genetically match the defendant and that the random match probability is extremely low, say 1 in 100 million. The random match probability—often interpreted as the probability that someone who is not the source would coincidentally match, \(\Pr(\match \pmid \neg \source)\)—is a common measure of the strength of a DNA match. The lower this probability, the more strongly incriminating the match. Strictly speaking, a match is strong evidence that the defendant is the source only if the likelihood ratio

\[\frac{\Pr(\textsf{DNA match} \pmid \source)}{\Pr(\textsf{DNA match} \pmid \neg \source)}\]is significantly greater than one. In practice, however, when the random match probability is low—that is, \(\Pr(\match \pmid \neg \source)\) is low—the likelihood ratio should be significantly above one because the probability that the individual who is the source would match, \(\Pr(\match \pmid \source)\), should be high so long as the test has a low false negative rate. For practical purposes, then, a low random match probability does count as strong incriminating evidence.

When it comes to cold-hit matches, however, further complications arise. The Puckett case can serve as an illustration. In 2008, John Puckett was identified through a database search of 338,000 profiles. He was the only individual in the database who matched the traces collected from Diana Sylvester, a victim of rape in 1972. The expert witness testified that Puckett’s genetic profile should occur randomly among Caucasian men with a frequency of 1 in 1.1 million. This would seem strong evidence against Puckett’s innocence. But the DNA expert for the defense, Bicka Barlow, pointed out that besides the cold-hit match the evidence against Puckett was slim. Barlow argued that the correct assessment of the cold-hit match required to multiply 1/1.1 million by the size of the database. Call the result of this multiplication the database match probability. Multiplying 1/1.1 million by 338,000 yields a database match probability of roughly 1/3, an unimpressive figure. If someone in the database could match with a probability of 1/3, the cold-hit match should not count as strong evidence against Puckett. This was Barlow’s argument.

Barlow followed a 1996 report by the National Research Council, often referred to as NRC II (National Research Council 1996). The report recommended that in cold-hit cases the random match probability should be multiplied by the size of the database. This correction was meant to guard against the heightened risk of mistaken matches for the innocent people in the database. NRC II used an analogy. If you toss several different coins at once and all show heads on the first attempt, this outcome seems strong evidence that the coins are biased. If, however, you repeat this experiment sufficiently many times, it is almost certain that at some point all coins will land heads. This outcome should not count as evidence that the coins are biased. According to NRC II, repeating the coin toss experiment multiple times is analogous to trying to find a match by searching through a database of profiles. As the size of the database increases, it is more likely that someone in the database who had nothing to do with the crime would match.

The aptness of the analogy has been challenged (Donnelly and Friedman 1999). Searching a larger database no doubt increases the probability of finding a match at some point. But the relevant proposition here is not “At least one of the profiles in the database would randomly match the crime sample”. Rather, the relevant proposition is “The profile of the defendant on trial would randomly match the crime sample”. The probability of finding a match between the defendant and the crime sample does not increase because other people in the database are tested. In fact, suppose everyone in the world is recorded in the database. A unique cold-hit match would be extremely strong evidence of guilt since everybody would be excluded as a suspect except one matching individual. Instead, if the random match probability were multiplied by the size of the database, the probative value of the match should be quite low. This is counter-intuitive.

Another analogy is sometimes used to argue that the evidentiary value of a cold-hit match should be weakened. The analogy is between searching for a match in a database and multiple hypothesis testing, an objectionable research practice. In classical hypothesis testing, if the probability of type I error in a single test of a hypothesis is .05, this probability will increase by testing the same hypothesis multiple times. The database match probability—the argument goes—would correct for the increased risk of type I error. However, as Balding (2002, 2005) points out, multiple testing consists in testing the same hypothesis multiple times against new evidence. In cold-hit cases, multiple hypotheses—each concerning a different individual in the database—are tested only once and then excluded if a negative match occurs. From this perspective, the hypothesis that the defendant is the source is one of the many hypotheses subject to testing. The cold-hit match supports that hypothesis and rules out the others.

2.2.2 The likelihood ratio of cold-hit matches

A more principled way to assess cold-hit matches is based on the likelihood ratio. The proposal draws from the literature on the so-called island problem, studied by Eggleston (1978), Dawid (1994), and Dawid and Mortera (1996). Let the prosecutor’s hypothesis \(H_p\) be “The suspect is the source of the crime traces” and the defense hypothesis \(H_d\) be “The suspect is not the source of the crime traces”. Let \(M\) be the DNA match between the crime stain and the suspect (included in the database) and \(D\) the information that no one among the profiles in the database, except for the suspect, matches the crime stain. The likelihood ratio associated with \(M\) and \(D\) should be (Balding and Donnelly 1996; Taroni et al. 2014):

\[V = \frac{\Pr(M,D\pmid H_p)}{\Pr(M,D\pmid H_d)}.\]Since \(\Pr(A\wedge B)=\Pr(A\pmid B)\times \Pr(B)\), for any statement \(A\) and \(B\), this ratio can be written as

\[ V = \frac{\Pr(M\pmid H_p,D)}{\Pr(M\pmid H_d,D)} \times \frac{\Pr(D\pmid H_p)}{\Pr(D\pmid H_d)}.\]The first ratio

\[\frac{\Pr(M\pmid H_p,D)}{\Pr(M\pmid H_d,D)}\]is roughly \(\slashfrac{1}{\gamma}\), where \(\gamma\) is the random match probability. The second ratio

\[\frac{\Pr(D\pmid H_p)}{\Pr(D\pmid H_d)}\]is the database search ratio, defined as follows (for details, see Balding and Donnelly 1996; Taroni et al. 2014):

\[ \frac{\Pr(D\pmid H_p)}{\Pr(D\pmid H_d)} = \frac{1}{1-\varphi},\]where \(\Pr(S \pmid H_d)=\varphi\) and \(S\) stands for the proposition that someone in the database is the source of the crime traces. Donnelly and Friedman (1999) derived a similar formula for this ratio. As the database gets larger, \(\varphi\) increases and the database search ratio increases. This ratio equals one only if no one in the database could be the source.

Since the likelihood ratio \(V\) of the cold-hit match results by multiplying the likelihood ratio of the DNA match and the database search ratio, \(V\) will always be greater than the mere likelihood ratio of the match (except for the unrealistic case in which \(\varphi=0\)) . Thus, a cold-hit DNA match should count as stronger evidence than a DNA match of a previously identified suspect. Dawid and Mortera (1996) study different database search strategies and consider the possibility that information about the match is itself uncertain, but the general point remains. Under reasonable assumptions, ignoring the database search would give a conservative assessment of the evidentiary strength of the cold-hit match.

There is intuitive resistance in basing a conviction on a cold-hit match, but this resistance is less strong in case of an ordinary match (more on this later in Section 6). This preference for convictions based on an ordinary DNA match seems in tension with the claim that a cold-hit match is stronger evidence of guilt than an ordinary match. There is a way to reconcile both sides, however. The key is to keep in mind that the evidentiary strength—measured by the likelihood ratio—should not be confused with the posterior probability of guilt given the evidence. If the cold-hit match is the only evidence of guilt, the posterior probability of guilt may well be lower compared to cases in which other evidence, such as investigative leads, supplements the DNA match. This lower posterior probability would justify the intuitive resistance towards convictions in cold-hit cases despite the stronger probative value of the cold-hit match.

2.3 Choosing competing hypotheses

The likelihood ratio is helpful for assessing the strength of the evidence, as the preceding discussion about cold-hit matches shows. One major difficulty, however, is the choice of the hypotheses \(H\) and \(H'\) that should be compared. The hypotheses should compete with one another—say, in a criminal trial, \(H\) is the hypothesis put forward by the prosecution and \(H'\) is the hypothesis put forward by the defense. But this constraint leaves open the possibility for manipulations and misinterpretations of the evidence. Let us examine some of the main controversies in the literature on this topic.

2.3.1 Ad hoc hypotheses and Barry George

Consider again a stylized DNA evidence case. Suppose the prosecutor puts forward the hypothesis that the suspect left the traces found at the crime scene. This hypothesis is well supported by laboratory analyses showing that the defendant genetically matches the traces. The defense, however, responds by putting forward the following ad hoc hypothesis: “The crime stain was left by some unknown person who happened to have the same genotype as the suspect”. Since the probability of the DNA match given either hypothesis is 1, the likelihood ratio equals 1 (Evett, Jackson, and Lambert 2000). The problem generalizes. For any item of evidence and any given prosecutor’s hypothesis \(H\), there is an ad hoc competing hypothesis \(H^*\) such that

\[\frac{\Pr(E \pmid H)}{\Pr(E \pmid H^*)}=1.\]Hypothesis \(H^*\) is a just-so hypothesis, one that is selected only because it explains the evidence just as well as hypothesis \(H\) does (Mayo 2018: 30–55). If no further constraints are placed on the choice of the competing hypotheses—it would seem—no evidence could ever incriminate a defendant.

Judges and jurors will often recognize ad hoc hypotheses for what they are—artificial theories that should not be taken seriously. Perhaps, the common sense of the participants in a trial will suffice to constrain the choice of hypotheses in the right way. But real cases are complex, and it is not always obvious whether a choice of competing hypotheses, which are not obviously ad hoc, is legitimate or not.

A notable example is R. v. George (2007 EWCA Crim 2722). Barry George was accused of murdering British journalist and TV celebrity Jill Dando. A single particle of firearm residue was found one year later in George’s coat pocket and it matched the residue from the crime scene. This was the key incriminating evidence against him. George was convicted, and his first appeal was unsuccessful. After the first appeal, Ian Evett from the Forensic Science Service worried that the evidence had not been properly assessed at trial. The jurors were presented with the conditional probability of finding the firearm residue in George’s coat given the defense hypothesis that George did not fire the gun. This probability was estimated to be quite low. But the jurors were not presented with the conditional probability of finding the same evidence given the prosecutor’s hypothesis that George did fire the gun that shot Dando. An expert witness, Mr. Keeley, was asked to provide both conditional probabilities and estimated them to be \(\slashfrac{1}{100}\), which indicated that the firearm residue had no probative value. George appealed again in 2007, and relying on Keely’s estimates, won the appeal.

A study of the trial transcript shows that Keeley’s choice of hypotheses lacked coherence and the likelihood ratio based on them was therefore meaningless. On one occasion, Keeley compared the hypothesis that the particle found in George’s pocket came from a gun fired by George himself, and the alternative hypothesis that the particle came from another source. On another occasion, Keeley took the prosecutor’s hypothesis to be “The particle found in George’s pocket came from the gun that killed Dando.” But the conditional probability of the evidence given this hypothesis should not be low. It should actually be one. The most charitable reading of the trial transcript suggests that the expert had in mind the hypotheses “George was the man who shot Dando” and “The integrity of George’s coat was corrupted”. But Keeley gave no justification for why these hypotheses should be compared in the likelihood ratio (see Fenton et al. 2014 for details).

A related complication is that competing hypotheses can concern any factual dispute, from minute details such as whether the cloth used to suffocate the victim was red or blue, to ultimate questions such as whether the defendant stabbed the victim. The likelihood ratio varies across hypotheses formulated at different levels of granularity: offense, activity and source level hypotheses (on this distinction, see earlier in §1.5). It is even possible that, at the source level, the likelihood ratio favors one side, say the prosecution, but at the offence level, the likelihood ratio favors the other side, say the defense, even though the hypotheses at the two levels are quite similar (Fenton, et al. 2014).

2.3.2 Exclusive and exhaustive?

The confusion in the Barry George case is attributable to the absence of clear rules for choosing the hypotheses in the likelihood ratio. One such rule could be: pick competing hypotheses that are exclusive (they cannot be both true) and exhaustive (they cannot be both false). In this way, the parties would not be able to pick ad hoc hypotheses and skew the assessment of the evidence in their own favor.

There are other good reasons why the hypotheses in the likelihood ratio should be exclusive and exhaustive. For if they are not, the likelihood ratio can deliver counterintuitive results. To see why, first consider hypotheses that are not mutually exclusive. Let \(H_p\) stand for “The defendant is guilty” and \(H_d\) for “The defendant was not at the crime scene”. Let \(E\) stand for

Ten minutes before the crime took place the defendant—seen at a different location—was overheard on the phone saying go ahead and kill him.

The evidence positively supports each hypothesis, yet it is conceivable that the likelihood ratio should equal one in this context. Further, consider two competing hypotheses that are not exhaustive. Suppose Fred and Bill attempted to rob a man. The victim resisted, was struck on the head and died. Say \(H_p\) stand for “Fred struck the fatal blow” and \(H_d\) stand for “Bill struck the fatal blow”. The hypotheses are not exhaustive because a third hypothesis is “The man did not die from the blow”. Suppose \(E\) is the information that the victim had a heart attack six months earlier. The likelihood ratio

\[\frac{\Pr(E \pmid H_p)}{\Pr(E \pmid H_d)}\]equals one since

\[\Pr(E\pmid H_p)=\Pr(E\pmid H_d).\]Yet \(E\) reduces the probability of both \(H_p\) and \(H_d\). So, in this case, the evidence negatively supports each hypothesis, contrary to what the likelihood ratio suggests.

But relying on exclusive and exhaustive hypotheses is not without complications either. Consider an expert who decides to formulate the defense hypothesis by negating the prosecution hypothesis, say, “the defendant did not hit the victim in the head”. This choice of defense hypothesis can be unhelpful in assessing the evidence. What is the probability that the suspect would carry such and such blood stain if he did not hit the victim in the head? This depends on whether he was present at the scene, what he was doing at the time and many other circumstances. As Evett, Jackson, and Lambert (2000) point out, the choice of a particular hypothesis to be used in the evaluation of the strength of the evidence will depend on contextual factors. More often than not, the hypotheses chosen will not be mutually exclusive. Comparing exclusive and exhaustive hypotheses can also be unhelpful for jurors or judges making a decision at trial. In a paternity case, for example, the expert should not compare the hypotheses “The accused is the father of the child” and its negation, but rather, “The accused is the father of the child” and “The father of the child is a man unrelated to the putative father” (Biedermann et al. 2014). Even though the relatives of the accused are potential fathers, considering such a far-fetched possibility would make the assessment of the evidence more difficult than needed (Evett et al. 2000).

Exclusive and exhaustive hypotheses guard against arbitrary comparisons and ensure a more principled assessment of the evidence. The drawback is that such hypotheses cover the entire space of possibilities, and sifting through this space is cognitively unfeasible (Allen 2017). In this respect, comparing more circumscribed hypotheses is preferable. The danger of doing so, however, is that likelihood ratios heavily depend on the hypotheses that are compared. The more latitude in the choice of the hypotheses, the more variable the likelihood ratio as a measure of evidentiary value.

2.4 The two-stain problem

A case study that further illustrates the limitations of the likelihood ratio as a measure of evidentiary strength is the two-stain problem, originally formulated by Evett (1987).

In Evett’s original formulation, two stains from two different sources are left at the crime scene, and the suspect’s blood matches one of them. Let the first hypothesis be that the suspect was one of the two men who committed the crime and the second hypothesis the negation of the first. Evett (1987) shows (see his paper for details) that the likelihood ratio of the match relative to these two hypotheses is \(\slashfrac{1}{2q_1}\) where \(q_1\) is the estimated frequency of the characteristics of the first stain. Surprisingly, the likelihood ratio does not depend on the frequency associated with the second stain.

Consider now a more complex two-stain scenario. Suppose a crime was committed by two people, who left two stains at the crime scene: one on a pillow and another on a sheet. John Smith, who was arrested for a different reason, genetically matches the DNA on the pillow, but not the one on the sheet. Meester and Sjerps (2004) argue that there are three plausible pairs of hypotheses associated with numerically different likelihood ratios (see their paper for details). The three options are listed in table 2, where \(R\) is the random match probability of Smith’s genetic profile and \(\delta\) the prior probability that Smith was one of the crime scene donors.

| \(H_p\) | \(H_d\) | LR |

|---|---|---|

| Smith was one of the crime scene donors. | Smith was not one of the crime scene donors. | \(\frac{R}{2}\) |

| Smith was the pillow stain donor. | Smith was not one of the crime scene donors. | \(R\) |

| Smith was the pillow stain donor. | Smith was not the pillow stain donor. | \(\frac{R(2-\delta)}{2(1-\delta)}\) |

Even though the likelihood ratios are numerically different, their posterior probabilities given the evidence are the same. Note that the prior odds of the three \(H_p\)’s in the table should be written in terms of \(\delta\). Following (Meester and Sjerps 2004), the prior odds of the first hypothesis in the table are \(\slashfrac{\delta}{(1-\delta)}\). The prior odds of the second hypothesis are \(\slashfrac{(\delta/2)}{(1-\delta)}\). The prior odds of the third hypothesis are \(\slashfrac{(\delta/2)}{(1-(\delta/2))}\). In each case, the posterior odds—the result of multiplying the prior odds by the likelihood ratio—are the same: \(R\times \slashfrac{\delta}{(2(1-\delta))}\). So despite differences in the likelihood ratio, the posterior odds of the different hypotheses are the same so long as the priors are appropriately related.

Meester and Sjerps (2004) recommend that each likelihood ratio should be accompanied by a tabular account of how a choice of prior odds (or prior probabilities) will impact the posterior odds, for a sensible range of priors (for a general discussion of this strategy, called “sensitivity analysis”, see earlier discussion in §1.4). In this way, the impact of the likelihood ratio is made clear, no matter the hypotheses chosen. This strategy concedes that likelihood ratios are insufficiently informative, and that they should be combined with other information, such as a range of priors, to allow for an adequate assessment of the evidence.

3. Bayesian Networks for Legal Applications

So far we examined how probability theory can help to assess single items of evidence such as a DNA match. But things are often more complicated. In legal cases, different lines of evidence may converge, such as two witnesses who testify that the defendant was seen at the crime scene, or they may diverge, such as a witness who asserts the defendant was seen at the crime scene while DNA testing shows no genetic match between the defendant and the scene. Another source of complexity is that the hypotheses put forward by the parties in a trial are often complex structures of statements. How can different statements, and their supporting evidence, be combined and the overall prosecutor’s case (or the defense’s case) be evaluated?

The probability of a hypothesis given multiple pieces of evidence can, in principle, be assessed by sequential applications of Bayes’ theorem. Consider, for example, a case in which the defendant faces two pieces of incriminating evidence: a DNA match and hair evidence found at the scene matching the defendant’s hair color. Assume—as is often done—that someone’s hair color is independent of someone’s genetic profile. Say the likelihood ratio of hair evidence is 40 and the likelihood ratio of the DNA match is 200, that is, it is 40 times more likely (and 200 times more likely) to find the evidence given the guilt hypothesis than the innocence hypothesis. If the prior odds of guilt to innocence are \(\slashfrac{1}{1000}\), the posterior odds would be \(\slashfrac{1}{1000}\times 40\times 200=8\).

These calculations are straightforward, but in more realistic cases, there will be complications. The parties at trial will often put forward several piecemeal claims that need to be combined together to form a theory of what happened. For example, the prosecutor may present eyewitness testimony to argue that the defendant ran away from the crime scene, along with documentary evidence as proof of a motive. The different piecemeal claims, each supported by distinct pieces of evidence, must be combined to form structured hypotheses about what happened. Since different claims and different pieces of evidence may depend on one another, direct calculations would soon become unmanageable. Fortunately, a tool exists to make the task easier: Bayesian networks. This section identifies guidelines for deploying Bayesian networks in the presentation, aggregation and evaluation of complex bodies of evidence and hypotheses.

3.1 Bayesian networks to the rescue

The idea that Bayesian networks can be used for probabilistic reasoning in legal fact-finding started gaining traction in late eighties (Friedman 1986) and early nineties (Edwards 1991). Two recent books on the topic with an emphasis on legal applications are Fenton and Neil 2013 [2018] and Taroni et al. 2014. A Bayesian network comprises two components: first, a directed acyclic graph of relations of dependence (represented by arrows) between variables (represented by nodes); second, conditional probability tables. Consider the graphical component first. The graph is acyclic because the arrows connecting the nodes do not form loops. As an illustration, let \(H\) be the claim that the suspect committed the murder, BT the presence of a blood type B match with a crime scene stain, and \(W\) the fact that an eyewitness observed the suspect near the scene around the time of the crime. The graphical component of the Bayesian network would look like this:

Figure 2

The ancestors of a node \(X\) are all those nodes from which we can reach \(X\) by following the arrows going forwards. The parents of a node \(X\) are those for which we can do this in one step. The descendants of \(X\) are all which can be reached from \(X\) by following the arrows going forward. The children are those for which we can do this in one step. In the example, \(H\) is the parent (and ancestor) of both \(W\) and BT, which are its children (and descendants). There are no non-parent ancestors or non-children descendants.

The variables, which are represented by nodes and are connected by arrows, stand in relation of probabilistic dependence. To describe these relations, the graphical model is accompanied by conditional probability tables. For parentless nodes such as \(H\), the tables specify the prior probabilities of all their possible states. Assuming \(H\) stands for a binary random variable, with two possible states, the prior probabilities could be:

| Prior | |

|---|---|

| \(H=\text{murderer}\) | .01 |

| \(H=\text{not.murderer}\) | .99 |

The .01 figure for \(H=\text{murderer}\) rests on the assumption that, absent any incriminating evidence, the defendant is unlikely to be guilty. For children nodes, the tables specify their conditional probability given combinations of their parents’ states. If the variables are binary, an assignment of values for them could be:

| \(H=\text{murderer}\) | \(H=\text{not.murderer}\) | |

|---|---|---|

| \(W=\text{seen}\) | .7 | .4 |

| \(W=\text{not.seen}\) | .3 | .6 |

| \(H=\text{murderer}\) | \(H=\text{not.murderer}\) | |

|---|---|---|

| \(\BT=\text{match}\) | 1 | .063 |

| \(\BT=\text{no.match}\) | 0 | .937 |

According to the tables above, even if the defendant is not the culprit, the eyewitness testimony would still incriminate him with probability of .4, while the blood evidence with probability equal to only .063. The blood type frequency estimate is realistic (Lucy 2013: 141), and so are the conditional probabilities for the eyewitness identification. As expected, eyewitness testimony is assumed to be less trustworthy than blood match evidence (but for complications about assessing eyewitness testimony, see Wixted and Wells 2017; Urbaniak et al. 2020).

The three probability tables above are all that is needed to define the probability distribution. The tables do not specify probabilistic dependencies between nodes that are not in a relation of child/parent, such as BT and \(W\). Since there is no arrow between them, nodes BT and \(W\) are assumed to be independent conditional on \(H\), that is, \(\Pr(W \pmid H)=\Pr(W \pmid H \wedge \BT)\). This fact represents, as part of the structure of the network, the independence between eyewitness testimony and blood evidence. A generalization of this fact is the so-called Markov condition (see the textbook by Neapolitan [2004] and the supplement on Bayesian networks of the entry on artificial intelligence.)

While the Bayesian network above—comprising a directed acyclic graph along with probability tables—is simple, a correct intuitive assessment of the probability of the hypothesis given the evidence is already challenging. Try to guess the probability that the defendant committed the murder (\(H=\text{murderer}\)) given the following states of the evidence:

-

The suspect’s blood type matches the crime stain but information about the witness is unavailable.

-

The suspect’s blood type matches the crime stain but the witness says they did not see the suspect near the crime scene.

-

The suspect’s blood type matches the crime stain and the witness says they saw the suspect near the crime scene.

Already at this level of complexity, calculations by hand become cumbersome. In contrast, software for Bayesian networks (see, for example, the \(\textsf{R}\) package \(\textsf{bnlearn}\) developed by Marco Scutari and described in Scutari and Denis 2015) will easily give the following results:

| \(H=\text{murderer}\) | |

|---|---|

| \(\BT=\text{match},W=?\) | .138 |

| \(\BT=\text{match},W=\text{not.seen}\) | .074 |

| \(\BT=\text{match}, W=\text{seen}\) | .219 |

Perhaps surprisingly the posterior probability of \(H\) = murderer is about .22 even when both pieces of evidence are incriminating (\(\BT=\text{match}, W=\text{seen}\)).

3.2 Idioms

While modeling the relationships between evidence and hypotheses, simple graphical patterns (called idioms) are often used. Complex graphical models can be constructed by combining these basic patterns in a modular way. General methods for constructing Bayesian networks are discussed in (Neil, Fenton, and Nielson 2000; Hepler, Dawid, and Leucari 2007) and general idioms are discussed in (Fenton, Neil, and Lagnado 2013). A few of the basic idioms are illustrated below.

The evidence idiom is the most basic graphical representation of the relation between a hypothesis and a piece of evidence:

Figure 3

This directed graph suggests that the direction of influence—which could, but need not, be interpreted as causal influence—goes from hypothesis to evidence (though the probabilistic dependence goes both ways). The hypothesis node and the evidence node can be binary variables, such as “The defendant was the source of the crime scene traces” (hypothesis) and “The defendant genetically matches the crime traces” (evidence). But the variables need not be binary. The hypothesis node might take values from the range of 1–40, say the distance in meters from which the gun was shot, and the evidence node might be a continuous variable representing the density of gun shot residues (Taroni et al. 2014).

A more complex idiom, called the evidence accuracy idiom, consists of two arrows going into the evidence node (Bovens and Hartmann 2004; Fenton, Neil, and Lagnado 2013). One incoming arrow comes from the hypothesis node and the other from the accuracy node. This idiom can be used to model, say, an alcohol test:

Figure 4

The directions of the arrows indicate that the accuracy of the evidence (accuracy node) and the alcohol level (hypothesis node) influence the outcome of the test (evidence node). The graphical model represents different sources of uncertainty. The uncertainty associated with the sensitivity and specificity of the test—that is, the probability that the tests reports excessive alcohol level when the level is excessive (sensitivity) and the probability that the test reports normal alcohol level when the level is normal (specificity)—is captured by the arrow going from the hypothesis node (Excess alcohol level) to the evidence node (Evidence for excess). Other sources of uncertainty comprise the possibility that the police officer lied about the test report or the possibility that the driver took medications which then affected the alcohol level. These possibilities can be taken into consideration by adding an accuracy node (or multiple accuracy nodes, if each factor is kept separate from the other).

When multiple items of evidence depend on one another—as may happen in many legal cases—this situation is modeled by the evidence dependency idiom. Following an example by Fenton and Neil (2013 [2018]), if one of two security cameras directed at the same location captured an image of someone who looks like the defendant but isn’t him, it is likely that the same person walked by the second camera, which also captured the same image. In such cases, presenting the second recording as independent from the first would lead to overestimating the strength of the evidence.

| Node | Proposition |

|---|---|

| H | Defendant present at the scene |

| C1 | Camera 1 captures image of a matching person |

| C2 | Camera 2 captures image of a matching person |

| D | What cameras capture is dependent |

Figure 5

The network structure is quite natural. The truth of the hypothesis, say, the defendant was present at the crime scene, influences whether the cameras capture an image of someone who looks like the defendant. However, if the two camera recordings are dependent on one another (for instance, they are directed at the same spot with a similar angle), the fact that the second camera captured the same image as the first does not make the hypothesis more likely once the first camera recording is known.

Finally, the scenario idiom can model complex hypotheses, consisting of a sequence of events organized in space and time (a scenario). A graphical model that uses the scenario idiom would consist of the following components: first, nodes for the states and events in the scenario, with each node linked to the supporting evidence; second, a separate scenario node that has states and events as its children; finally, a node corresponding to the ultimate hypothesis as a child of the scenario node. The graphical model would look like this (Vlek et al. 2014):

Figure 6 [An extended description of figure 6 is in the supplement.]

The scenario node unifies the different events and states. Because of this unifying role, increasing the probability of one part of the scenario (say State/event 2) will also increase the probability of the other parts (State/event 1 and State/event 3). This captures the fact that the different components of a scenario form an interconnected sequence of events.

A discussion of modeling crime scenarios by means of other graphical devices (called structural scenario spaces) mixed with probabilities can be found in the work of Shen et al. (2007); Bex (2011, 2015); and Verheij (2017). See also the survey by Di Bello and Verheij (2018). Dawid and Mortera (2018) give a treatment of scenarios in terms of Bayesian networks. Lacave and Díez (2002) show how Bayesian Network can be used to construct explanations.

3.3 Modeling an entire case

Kadane and Schum (2011) made one of the first attempts to model an entire criminal case, Sacco and Vanzetti from 1920, using probabilistic graphs. More recently, Fenton and Neil (2013 [2018]) constructed a Bayesian network for the Sally Clark case (discussed earlier in §1.3), reproduced below:

Figure 7 [An extended description of figure 7 is in the supplement.]

The arrows depict relationships of influence between variables. Whether Sally Clark’s sons, call them \(A\) and \(B\), died by SIDS or murder (A.cause and B.cause) influences whether signs of disease (A.disease and B.disease) and bruising (A.bruising and B.bruising) were present. Since son \(A\) died first, whether \(A\) was murdered or died by SIDS (A.cause) influences how son \(B\) died (B.cause). How the sons died determines how many sons were murdered (No.murdered), and how many sons were murdered decides whether Sally Clark is guilty (Guilty).

According to the calculation by Fenton and Neil (2013 [2018]) (see their paper for details), the prior probability of Guilty = Yes should be .0789. After taking into account the incriminating evidence presented at trial, such as that there were signs of bruising but no signs of a preexisting disease affecting the children, the posterior probabilities are as follows:

| Evidence (cumulative) | \(\Pr(\textrm{Clark guilty})\) |

|---|---|

| A bruising | .2887 |

| A no signs of disease | .3093 |

| B bruising | .6913 |

| B no signs of disease | .7019 |

The incriminating evidence, combined, brings the probability of guilt from .0789 to .7019. This is a significant increase, but not quite enough for a conviction. If one wishes to perform sensitivity analysis—see earlier discussion in §1.4—by modifying some of the probabilities, this can be easily done. During the appeal trial, new evidence was discovered, in particular, evidence that son \(A\) was affected by a disease. Once this evidence is taken into account, the probability of guilt drops to .00459 (and if signs of disease were also present on \(B\), the guilt probability would drop even further to .0009). For a general discussion on how to elicit probabilities, see Renooij (2001) and Gaag et al. (1999).

4. Relevance

The preceding sections modeled evidence assessment, using Bayes’ theorem (Section 1), likelihood ratios (Section 2) and Bayesian networks (Section 3). Evidence assessment, however, begins with a preliminary decision, the identification of relevant evidence. Once a piece of evidence is deemed relevant, the next step is to assess its strength (probative value, weight). This section discusses how probability theory helps to identify relevant evidence.

4.1 Likelihood ratios

The U.S. Federal Rules of Evidence define relevant evidence as evidence that has

any tendency to make the existence of any fact that is of consequence to the determination of the action more probable or less probable than it would be without the evidence (rule 401).

This definition is formulated in a probabilistic language. Legal probabilists interpret it using the likelihood ratio, a standard probabilistic measure of evidential relevance (Aitken et al. 2010; Aitken and Taroni 1995 [2004]; Lempert 1977; Lyon and Koehler 1996; Sullivan 2019). The likelihood ratio (discussed in Section 2) is the probability of observing the evidence given the prosecutor’s or plaintiff’s hypothesis, divided by the probability of observing the same evidence given the defense’s hypothesis.

Let \(E\) be the evidence, \(H\) the prosecutor’s or plaintiff’s hypothesis, and \(H'\) the defense’s hypothesis. The likelihood ratio is defined as follows:

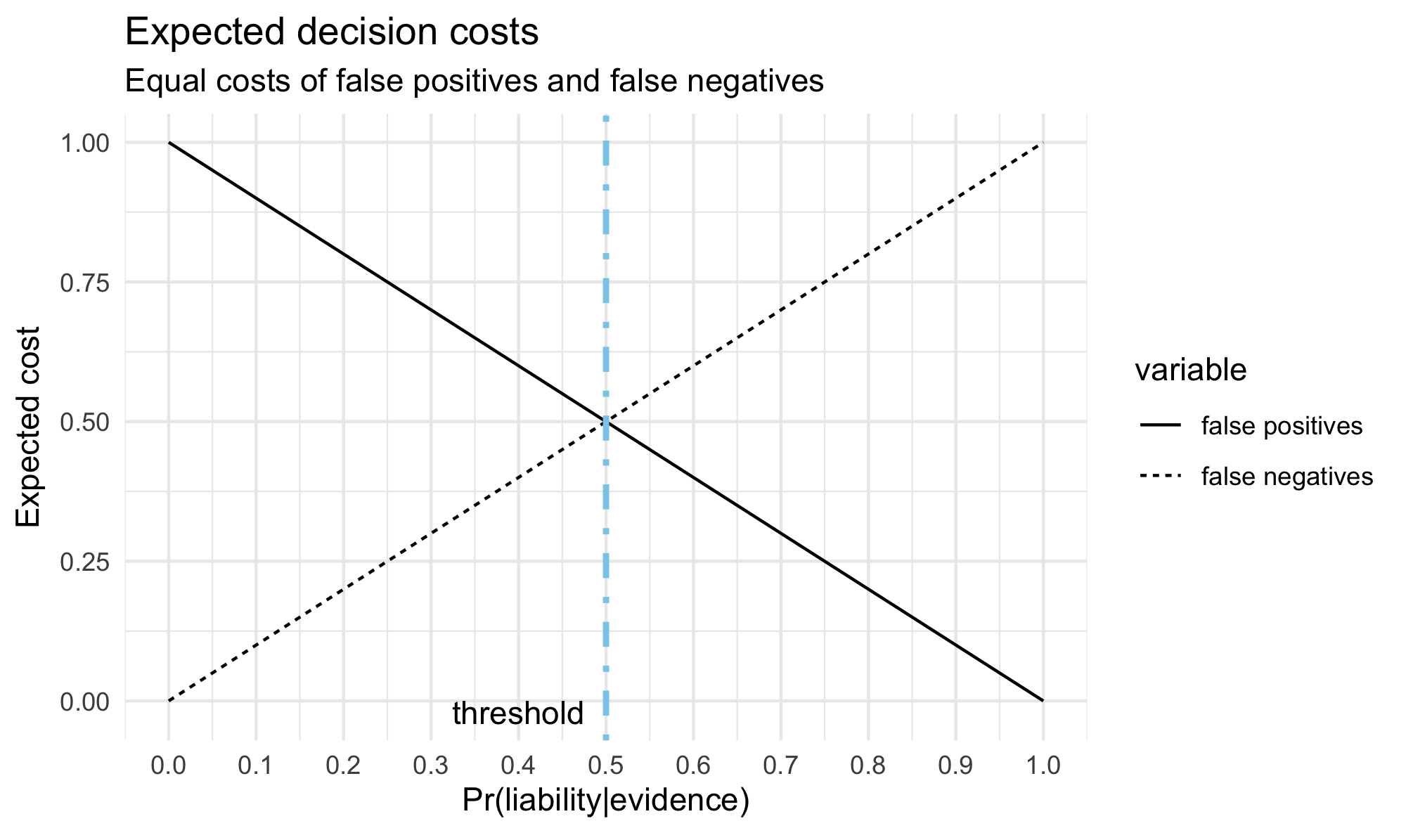

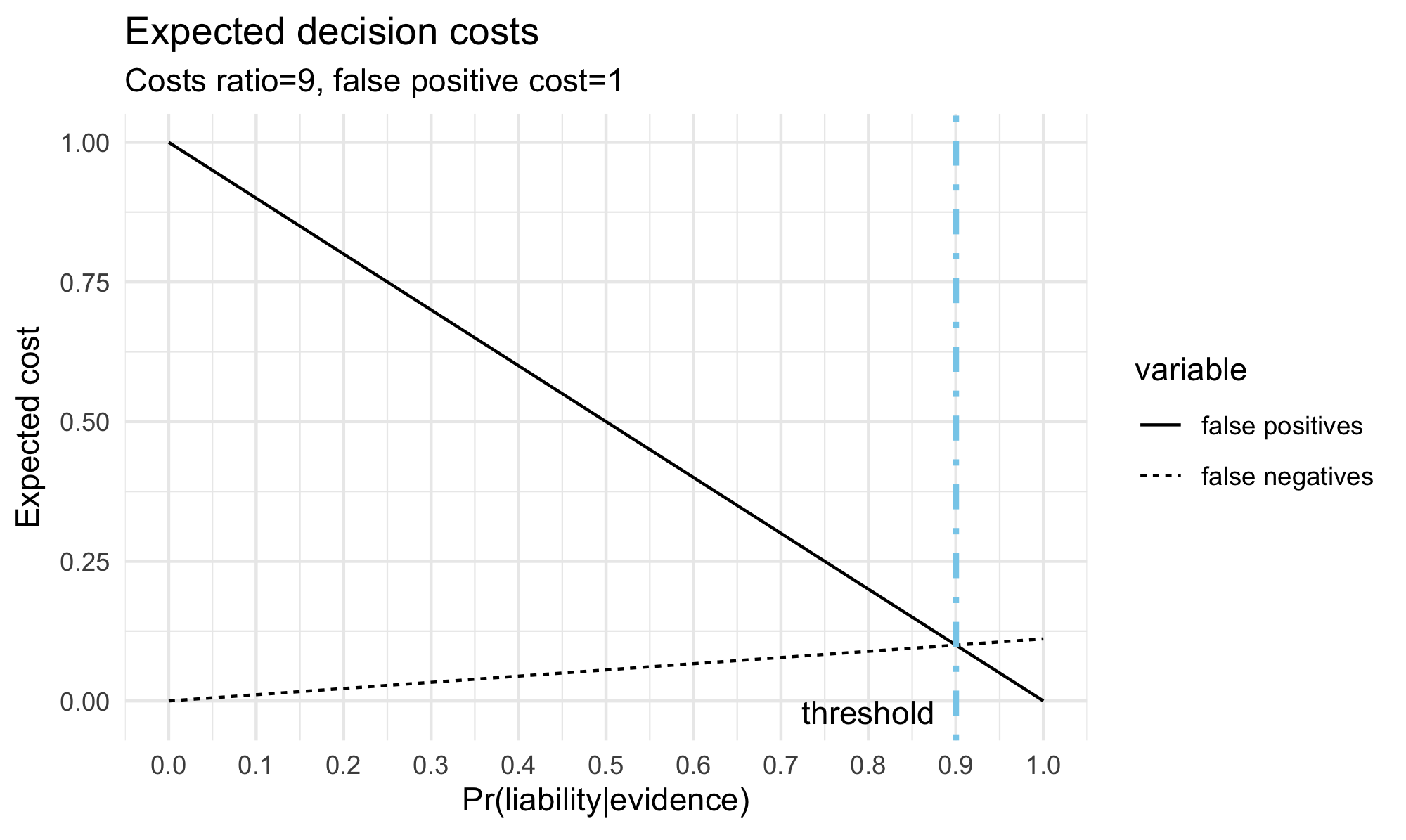

\[ LR(E,H,H') = \frac{P(E\pmid H)}{P(E\pmid H')}\]On the likelihood ratio interpretation, relevance depends on the choice of the competing hypotheses. A piece of evidence is relevant—in relation to a pair of hypotheses \(H\) and \(H'\)—provided the likelihood ratio \(LR(E, H, H')\) is different from one and irrelevant otherwise. For example, the bloody knife found in the suspect’s home is relevant evidence in favor of the prosecutor’s hypothesis because we think it is far more likely to find such evidence if the suspect committed the crime (prosecutor’s hypothesis) than if he didn’t (defense hypothesis) (Finkelstein 2009). In general, for values greater than one, \(LR(E, H, H')>1\), the evidence supports the prosecutor’s or plaintiff’s hypothesis \(H\), and for values below one, \(LR(E, H, H')<1\), the evidence supports the defense’s hypothesis \(H'\). If the evidence is equally likely under either hypothesis, \(LR(E, H, H')=1\), the evidence is irrelevant.