Modularity of Mind

The concept of modularity has loomed large in philosophy of psychology since the early 1980s, following the publication of Fodor’s landmark book The Modularity of Mind (1983). In the decades since the term ‘module’ and its cognates first entered the lexicon of cognitive science, the conceptual and theoretical landscape in this area has changed dramatically. Especially noteworthy in this respect has been the development of evolutionary psychology, whose proponents adopt a less stringent conception of modularity than the one advanced by Fodor, and who argue that the architecture of the mind is more pervasively modular than Fodor claimed. Where Fodor (1983, 2000) draws the line of modularity at the relatively low-level systems underlying perception and language, post-Fodorian theorists such as Sperber (2002) and Carruthers (2006) contend that the mind is modular through and through, up to and including the high-level systems responsible for reasoning, planning, decision making, and the like. The concept of modularity has also figured in recent debates in philosophy of science, epistemology, ethics, and philosophy of language—further evidence of its utility as a tool for theorizing about mental architecture.

- 1. What is a mental module?

- 2. Modularity, Fodor-style: A modest proposal

- 3. Post-Fodorian modularity

- 4. Modularity and the border between perception and cognition

- 5. Modularity and philosophy

- Bibliography

- Other Internet Resources

- Related Entries

1. What is a mental module?

In his classic introduction to modularity, Fodor (1983) lists nine features that collectively characterize the type of system that interests him. In their original order of presentation, they are:

- Domain specificity

- Mandatory operation

- Limited central accessibility

- Fast processing

- Informational encapsulation

- ‘Shallow’ outputs

- Fixed neural architecture

- Characteristic and specific breakdown patterns

- Characteristic ontogenetic pace and sequencing

A cognitive system counts as modular in Fodor’s sense if it is modular “to some interesting extent,” meaning that it has most of these features to an appreciable degree (Fodor, 1983, p. 37). This is a weighted ‘most’, since some marks of modularity are more important than others. Informational encapsulation, for example, is more or less essential for modularity, as well as explanatorily prior to several of the other features on the list (Fodor, 1983, 2000).

Each of the items on the list calls for explication. To streamline the exposition, we will cluster most of the features thematically and examine them on a cluster-by-cluster basis, along the lines of Prinz (2006).

Encapsulation and inaccessibility. Informational encapsulation and limited central accessibility are two sides of the same coin. Both features pertain to the character of information flow across computational mechanisms, albeit in opposite directions. Encapsulation involves restriction on the flow of information into a mechanism, whereas inaccessibility involves restriction on the flow of information out of it.

A cognitive system is informationally encapsulated to the extent that in the course of processing its inputs it cannot access information stored elsewhere; all it has to go on is the information contained in those inputs plus whatever information might be stored within the system itself, for example, in a proprietary database (Fodor, 2000, pp. 62–63). (Note that items of information drawn on by a system in the course of its operations do not count as inputs to the system; otherwise the encapsulation criterion of modularity would be trivially satisfied.) In the case of language, for example:

A parser for [a language] L contains a grammar of L. What it does when it does its thing is, it infers from certain acoustic properties of a token to a characterization of certain of the distal causes of the token (e.g., to the speaker’s intention that the utterance should be a token of a certain linguistic type). Premises of this inference can include whatever information about the acoustics of the token the mechanisms of sensory transduction provide, whatever information about the linguistic types in L the internally represented grammar provides, and nothing else. (Fodor, 1984, pp. 245–246; italics in original)
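Fodor’s description of the parser specifies exactly which premises the module may draw on. As a purely hypothetical illustration (not a model from the literature), the toy Python sketch that follows pictures encapsulation as a fact about information flow: the encapsulated function has no parameter through which central memory could reach it, while a contrast function does. The class names `Grammar` and `CentralMemory`, the function names, and the lexicon entries are all invented for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Grammar:
    """Hypothetical proprietary database: maps acoustic forms to linguistic types."""
    lexicon: dict[str, str]


@dataclass
class CentralMemory:
    """Hypothetical store of the agent's beliefs and expectations (outside the module)."""
    expectations: dict[str, str] = field(default_factory=dict)


def encapsulated_parse(acoustics: list[str], grammar: Grammar) -> list[str]:
    """Premises: the transduced input plus the internal grammar, and nothing else.

    Central memory is not a parameter, so no belief can alter the analysis.
    """
    return [grammar.lexicon.get(form, "UNKNOWN") for form in acoustics]


def penetrable_parse(acoustics: list[str], grammar: Grammar,
                     memory: CentralMemory) -> list[str]:
    """Contrast case: central expectations override the grammar when they apply."""
    return [memory.expectations.get(form, grammar.lexicon.get(form, "UNKNOWN"))
            for form in acoustics]


if __name__ == "__main__":
    grammar = Grammar({"/dog/": "dog (N)", "/barks/": "barks (V)"})
    memory = CentralMemory({"/dog/": "cat (N)"})  # a mistaken expectation
    tokens = ["/dog/", "/barks/"]
    print(encapsulated_parse(tokens, grammar))        # ['dog (N)', 'barks (V)']
    print(penetrable_parse(tokens, grammar, memory))  # ['cat (N)', 'barks (V)']
```

Expressing encapsulation as a restriction on a function’s inputs is only one way to picture the idea; a real parser would perform non-demonstrative inference rather than table lookup.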

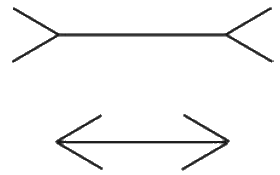

Similarly, in the case of perception—understood as a kind of non-demonstrative (i.e., defeasible, or non-monotonic) inference from sensory ‘premises’ to perceptual ‘conclusions’—the claim that perceptual systems are informationally encapsulated is equivalent to the claim that “the data that can bear on the confirmation of perceptual hypotheses includes, in the general case, considerably less than the organism may know” (Fodor, 1983, p. 69). The classic illustration of this property comes from the study of visual illusions, which tend to persist even after the viewer is explicitly informed about the character of the stimulus. In the Müller-Lyer illusion, for example, the two lines continue to look as if they were of unequal length even after one has convinced oneself otherwise, e.g., by measuring them with a ruler (see Figure 1, below).

Figure 1. The Müller-Lyer illusion.

Informational encapsulation is related to what Pylyshyn (1984, 1999) calls cognitive impenetrability, but the two properties are not the same. To a first approximation, a system is cognitively impenetrable if its operations are not subject to direct influence by information stored in central memory, paradigmatically in the form of beliefs and utilities. Since an encapsulated system is cut off from information stored anywhere outside it, central memory included, informational encapsulation entails cognitive impenetrability, but not the other way around: an impenetrable system might still draw on information from other peripheral systems. For example, auditory speech perception might be cognitively impenetrable but draw on visual information, as suggested by the McGurk effect (but see §2.1). The relation between informational encapsulation and cognitive impenetrability is, however, a matter of controversy (see §4).

The flip side of informational encapsulation is inaccessibility to central monitoring. A system is inaccessible in this sense if the intermediate-level representations that it computes prior to producing its output are inaccessible to consciousness, and hence unavailable for explicit report. In effect, centrally inaccessible systems are those whose internal processing is opaque to introspection. Though the outputs of such systems may be phenomenologically salient, their precursor states are not. Speech comprehension, for example, likely involves the successive elaboration of myriad representations (of various types: phonological, lexical, syntactic, etc.) of the stimulus, but of these only the final product—the representation of the meaning of what was said—is consciously available.

Mandatoriness, speed, and superficiality. In addition to being informationally encapsulated and centrally inaccessible, modular systems and processes are “fast, cheap, and out of control” (to borrow a phrase from roboticist Rodney Brooks). These features form a natural trio, as we’ll see.

The operation of a cognitive system is mandatory just in case it is automatic, that is, not under conscious control (Bargh & Chartrand, 1999). This means that, like it or not, the system’s operations are switched on by presentation of the relevant stimuli and those operations run to completion. For example, native speakers of English cannot hear the sounds of English being spoken as mere noise: if they hear those sounds at all, they hear them as English. Likewise, it’s impossible to see a 3D array of objects in space as 2D patches of color, however hard one may try.

Speed is arguably the mark of modularity that requires least in the way of explication. But speed is relative, so the best way to proceed here is by way of examples. Speech shadowing is generally considered to be very fast, with typical lag times of about 250 ms. Since the syllabic rate of normal speech is about 4 syllables per second, a lag of 250 ms corresponds to roughly one syllable. This suggests that shadowers are processing the stimulus in syllable-length bits, probably the smallest bits that can be identified in the speech stream, given that “only at the level of the syllable do we begin to find stretches of wave form whose acoustic properties are at all reliably related to their linguistic values” (Fodor, 1983, p. 62). Similarly impressive results are available for vision: in a rapid serial visual presentation task (matching picture to description), subjects were 70% accurate at 125 ms exposure per picture and 96% accurate at 167 ms (Fodor, 1983, p. 63). In general, a cognitive process counts as fast in Fodor’s book if it takes place in half a second or less.
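The arithmetic behind these figures can be made explicit. The first line restates the syllable-rate calculation; the second converts the reported RSVP exposure durations into approximate presentation rates (a conversion added here for illustration, not one Fodor reports). All of these durations fall well under the half-second threshold.

$$
\frac{1000\ \text{ms}}{4\ \text{syllables}} = 250\ \text{ms per syllable} \approx \text{typical shadowing lag}
$$

$$
\frac{1000\ \text{ms}}{125\ \text{ms}} = 8\ \text{pictures/s}\ (70\%\ \text{accurate}), \qquad
\frac{1000\ \text{ms}}{167\ \text{ms}} \approx 6\ \text{pictures/s}\ (96\%\ \text{accurate})
$$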

A further feature of modular systems is that their outputs are relatively ‘shallow’. Exactly what this means is unclear. But the depth of an output seems to be a function of at least two properties: first, how much computation is required to produce it (i.e., shallow means computationally cheap); second, how constrained or specific its informational content is (i.e., shallow means informationally general) (Fodor, 1983, p. 87). These two properties are correlated, in that outputs with more specific content tend to be more costly for a system to compute, and vice versa. Some writers have interpreted shallowness to require non-conceptual character (e.g., Carruthers, 2006, p. 4). But this conflicts with Fodor’s own gloss on the term, in which he suggests that the output of a plausibly modular system such as visual object recognition might be encoded at the level of ‘basic-level’ concepts, like DOG and CHAIR (Rosch et al., 1976). What’s ruled out here is not concepts per se, then, but highly theoretical concepts like PROTON, which are too informationally specific and too computationally expensive to meet the shallowness criterion.

All three of the features just discussed—mandatoriness, speed, and shallowness—are associated with, and to some extent explicable in terms of, informational encapsulation. In each case, less is more, informationally speaking. Mandatoriness flows from the insensitivity of the system to the organism’s utilities, which is one dimension of cognitive impenetrability. Speed depends upon the efficiency of processing, which positively correlates with encapsulation in so far as encapsulation tends to reduce the system’s informational load. Shallowness is a similar story: shallow outputs are computationally cheap, and computational expense is negatively correlated with encapsulation. In short, the more informationally encapsulated a system is, the more likely it is to be fast, cheap, and out of control.

Dissociability and localizability. To say that a system is functionally dissociable is to say that it can be selectively impaired, that is, damaged or disabled with little or no effect on the operation of other systems. As the neuropsychological record indicates, selective impairments of this sort have frequently been observed as a consequence of circumscribed brain lesions. Standard examples from the study of vision include prosopagnosia (impaired face recognition), achromatopsia (total color blindness), and akinetopsia (motion blindness); examples from the study of language include agrammatism (loss of complex syntax), jargon aphasia (loss of complex semantics), alexia (loss of object words), and dyslexia (impaired reading and writing). Each of these disorders has been found in otherwise cognitively normal individuals, suggesting that the lost capacities are subserved by functionally dissociable mechanisms.

Functional dissociability is associated with neural localizability in a strong sense. A system is strongly localized just in case it is implemented in neural circuitry that is (a) relatively circumscribed in extent (though not necessarily confined to contiguous areas) and (b) dedicated to the realization of that system alone. Localization in this sense goes beyond mere implementation in local neural circuitry, since a given bit of circuitry could subserve more than one cognitive function (Anderson, 2010). Proposed candidates for strong localization include systems for color vision (V4), motion detection (MT), face recognition (fusiform gyrus), and spatial scene recognition (parahippocampal gyrus).

Domain specificity. A system is domain specific to the extent that it has a restricted subject matter, that is, the class of objects and properties that it processes information about is circumscribed in a relatively narrow way. As Fodor (1983) puts it, “domain specificity has to do with the range of questions for which a device provides answers (the range of inputs for which it computes analyses)” (p. 103). The narrower the range of inputs for which a system can compute analyses, the narrower the range of problems it can solve; and the narrower the range of such problems, the more domain specific the device. Alternatively, the degree of a system’s domain specificity can be understood as a function of the range of inputs that turn the system on, where the size of that range determines the informational reach of the system (Carruthers, 2006; Samuels, 2000).

Domains (and by extension, modules) are typically more fine-grained than sensory modalities like vision and audition. This seems clear from Fodor’s list of plausibly domain-specific mechanisms, which includes systems for color perception, visual shape analysis, sentence parsing, and face and voice recognition (Fodor, 1983, p. 47)—none of which correspond to perceptual or linguistic faculties in an intuitive sense. It also seems plausible, however, that the traditional sense modalities (vision, audition, olfaction, etc.), and the language faculty as a whole, are sufficiently domain specific to count as displaying this particular mark of modularity (McCauley & Henrich, 2006).

Innateness. The final feature of modular systems on Fodor’s roster is innateness, understood as the property of “develop[ing] according to specific, endogenously determined patterns under the impact of environmental releasers” (Fodor, 1983, p. 100). On this view, modular systems come on-line chiefly as the result of a brute-causal process like triggering, rather than an intentional-causal process like learning. (For more on this distinction, see Cowie, 1999; for an alternative analysis of innateness, based on the notion of canalization, see Ariew, 1999.) The most familiar example here is language, the acquisition of which occurs in all normal individuals in all cultures on more or less the same schedule: single words at 12 months, telegraphic speech at 18 months, complex grammar at 24 months, and so on (Stromswold, 1999). Other candidates include visual object perception (Spelke, 1994) and low-level mindreading (Scholl & Leslie, 1999).

2. Modularity, Fodor-style: A modest proposal

The hypothesis of modest modularity, as we shall call it, has two strands. The first strand of the hypothesis is positive. It says that input systems, such as systems involved in perception and language, are modular. The second strand is negative. It says that central systems, such as systems involved in belief fixation and practical reasoning, are not modular.

In this section, we assess the case for modest modularity. The next section (§3) will be devoted to discussion of the hypothesis of massive modularity, which retains the positive strand of Fodor’s hypothesis while reversing the polarity of the second strand from negative to positive—revising the concept of modularity in the process.

The positive part of the modest modularity hypothesis is that input systems are modular. By ‘input system’ Fodor (1983) means a computational mechanism that “presents the world to thought” (p. 40) by processing the outputs of sensory transducers. A sensory transducer is a device that converts the energy impinging on the body’s sensory surfaces, such as the retina and cochlea, into a computationally usable form, without adding or subtracting information. Roughly speaking, the product of sensory transduction is raw sensory data. Input processing involves non-demonstrative inferences from this raw data to hypotheses about the layout of objects in the world. These hypotheses are then passed on to central systems for the purpose of belief fixation, and those systems in turn pass their outputs to systems responsible for the production of behavior.

Fodor argues that input systems constitute a natural kind, defined as “a class of phenomena that have many scientifically interesting properties over and above whatever properties define the class” (Fodor, 1983, p. 46). He argues for this by presenting evidence that input systems are modular, where modularity is marked by a cluster of psychologically interesting properties, the most interesting and important of these being informational encapsulation. We reviewed a representative sample of this evidence in §1, and for present purposes that should suffice. (Readers interested in further details should consult Fodor, 1983, pp. 47–101.)

2.1 Challenges to low-level modularity

Fodor’s claim about the modularity of input systems has been disputed by a number of philosophers and psychologists (Churchland, 1988; Arbib, 1987; Marslen-Wilson & Tyler, 1987; McCauley & Henrich, 2006). The most wide-ranging philosophical critique is due to Prinz (2006), who argues that perceptual and linguistic systems rarely exhibit the features characteristic of modularity. In particular, he argues that such systems are not informationally encapsulated. To this end, Prinz adduces two types of evidence. First, there appear to be cross-modal effects in perception, which would tell against encapsulation at the level of input systems. The classic example, from the speech perception literature, is the McGurk effect (McGurk & MacDonald, 1976): subjects watching a video of one phoneme being spoken (e.g., /ga/) dubbed with a sound recording of a different phoneme (/ba/) hear a third, altogether different phoneme (/da/). Second, he points to what look to be top-down effects on visual and linguistic processing, the existence of which would tell against cognitive impenetrability, i.e., encapsulation relative to central systems. Some of the most striking examples of such effects come from research on speech perception. Probably the best known is the phoneme restoration effect (Warren, 1970). When a phoneme is deleted from a spoken sentence and replaced with the sound of a cough, as with the /s/ sound in The state governors met with their respective legi*latures convening in the capital city, listeners ‘fill in’ the missing sound and hear the word as intact. By hypothesis, this filling-in is driven by listeners’ understanding of the linguistic context.

How convincing one finds this part of Prinz’s critique, however, depends on how convincing one finds his explanation of these effects. The McGurk effect, for example, seems consistent with the claim that speech perception is an informationally encapsulated system, albeit a system that is multi-modal in character (cf. Fodor, 1983, p. 132, n. 13). If speech perception is a multi-modal system, the fact that its operations draw on both auditory and visual information need not undermine the claim that speech perception is encapsulated. Other cross-modal effects, however, resist this type of explanation. In the double flash illusion, for example, viewers shown a single flash accompanied by two beeps report seeing two flashes (Shams et al., 2000). The same goes for the rubber hand illusion, in which synchronously brushing a subject’s hand, hidden from view, and a realistic rubber hand placed where the hidden hand would normally be seen gives rise to the impression that the rubber hand is one’s own (Botvinick & Cohen, 1998). With respect to phenomena of this sort, unlike the McGurk effect, there is no plausible candidate for a single, domain-specific system whose operations draw on multiple sources of sensory information.

Regarding phoneme restoration, it could be that the effect is driven by listeners’ drawing on information stored in a language-proprietary database (specifically, information about the linguistic types in the lexicon of English), rather than higher-level contextual information. Hence, it’s unclear whether the case of phoneme restoration described above counts as a top-down effect. But not all cases of phoneme restoration can be accommodated so readily, since the phenomenon also occurs when there are multiple lexical items available for filling in (Warren & Warren, 1970). For example, listeners fill the gap in the sentences The *eel is on the axle and The *eel is on the orange differently—with a /wh/ sound and a /p/ sound, respectively—suggesting that speech perception is sensitive to contextual information after all.

A further challenge to modest modularity, not addressed by Prinz (2006), comes from evidence that susceptibility to the Müller-Lyer illusion varies by both culture and age. For example, it appears that adults in Western cultures are more susceptible to the illusion than their non-Western counterparts; that adults in some non-Western cultures, such as hunter-gatherers from the Kalahari Desert, are nearly immune to the illusion; and that within (but not always across) Western and non-Western cultures, pre-adolescent children are more susceptible to the illusion than adults are (Segall, Campbell, & Herskovits, 1966). McCauley and Henrich (2006) take these findings as showing that the visual system is diachronically (as opposed to synchronically) penetrable, in that how one experiences the illusion-inducing stimulus changes as a result of one’s wider perceptual experience over an extended period of time. They also argue that the aforementioned evidence of cultural and developmental variability in perception militates against the idea that vision is an innate capacity, that is, the idea that vision is among the “endogenous features of the human cognitive system that are, if not largely fixed at birth, then, at least, genetically pre-programmed” and “triggered, rather than shaped, by the newborn’s subsequent experience” (p. 83). However, they also issue the following caveat:

[N]othing about any of the findings we have discussed establishes the synchronic cognitive penetrability of the Müller-Lyer stimuli. Nor do the Segall et al. (1966) findings provide evidence that adults’ visual input systems are diachronically penetrable. They suggest that it is only during a critical developmental stage that human beings’ susceptibility to the Müller-Lyer illusion varies considerably and that that variation substantially depends on cultural variables. (McCauley & Henrich, 2006, p. 99; italics in original)

As such, the evidence cited can be accommodated by friends of modest modularity, provided that allowance is made for the potential impact of environmental, including cultural, variables on development—something that most accounts of innateness make room for.

A useful way of making this point invokes Segal’s (1996) idea of diachronic modularity (see also Scholl & Leslie, 1999). Diachronic modules are systems that exhibit parametric variation over the course of their development. For example, in the case of language, different individuals learn to speak different languages depending on the linguistic environment in which they grow up, but they nonetheless share the same underlying linguistic competence in virtue of their (plausibly innate) knowledge of Universal Grammar. Given the observed variation in how people see the Müller-Lyer illusion, it may be that the visual system is modular in much the same way, with its development constrained by features of the visual environment. Such a possibility seems consistent with the claim that input systems are modular in Fodor’s sense.

Another source of difficulty for proponents of input-level modularity is neuroscientific evidence against the claim that perceptual and linguistic systems are strongly localized. Recall that for a system to be strongly localized, it must be realized in dedicated neural circuitry. Strong localization at the level of input systems, then, entails the existence of a one-to-one mapping between input systems and brain structures. As Anderson (2010, 2014) argues, however, there is no such mapping, since most cortical regions of any size are deployed in different tasks across different domains. For instance, the fusiform face area, once thought to be dedicated to the perception of faces, is also recruited for the perception of cars and birds (Gauthier et al., 2000). Likewise, Broca’s area, once thought to be dedicated to speech production, also plays a role in action recognition, action sequencing, and motor imagery (Tettamanti & Weniger, 2006). Functional neuroimaging studies generally suggest that cognitive systems are at best weakly localized, that is, implemented in overlapping, distributed brain networks rather than in discrete, disjoint regions.

Arguably the most serious challenge to modularity at the level of input systems, however, comes from evidence that vision is cognitively penetrable, and hence, not informationally encapsulated. The concept of cognitive penetrability, originally introduced by Pylyshyn (1984), has been characterized in a variety of non-equivalent ways (Stokes, 2013), but the core idea is this: A perceptual system is cognitively penetrable if and only if its operations are directly causally sensitive, in a semantically coherent way, to the agent’s beliefs, desires, intentions, or other nonperceptual states. Behavioral studies purporting to show that vision is cognitively penetrable date back to the early days of New Look psychology (Bruner & Goodman, 1947) and continue to the present day, with renewed interest in the topic emerging in the early 2000s (Firestone & Scholl, 2016). It appears, for example, that vision is influenced by an agent’s motivational states, with experimental subjects reporting that desirable objects look closer (Balcetis & Dunning, 2010) and ambiguous figures look like the interpretation associated with a more rewarding outcome (Balcetis & Dunning, 2006). In addition, vision seems to be influenced by subjects’ beliefs, with racial categorization affecting reports of the perceived skin tone of faces even when the stimuli are equiluminant (Levin & Banaji, 2006), and categorization of objects affecting reports of the perceived color of grayscale images of those objects (Hansen et al., 2006).

Skeptics of cognitive penetrability point out, however, that experimental evidence for top-down effects on perception can be explained in terms of effects of judgment, memory, and relatively peripheral forms of attention (Firestone & Scholl, 2016; Machery, 2015). Consider, for example, the claim that throwing a heavy ball (vs. a light ball) at a target makes the target look farther away, evidence for which consists of subjects’ visual estimates of the distance to the target (Witt, Proffitt, & Epstein, 2004). While it is possible that the greater effort involved in throwing the heavy ball caused the target to look farther away, it is also possible that the increased estimate of distance reflected the fact that subjects in the heavy ball condition judged the target to be farther away because they found it harder to hit (Firestone & Scholl, 2016). Indeed, subjects in a follow-up study who were explicitly instructed to base their estimates on visual appearance alone did not show the effect of effort, suggesting that the effect was post-perceptual (Woods, Philbeck, & Danoff, 2009). Other purported top-down effects on perception, such as the effect of golfing performance on size and distance estimates of golf holes (Witt et al., 2008), can be explained as effects of spatial attention, since visually attended objects tend to appear larger and closer (Firestone & Scholl, 2016). These and related considerations suggest that the case for cognitive penetrability, and by extension the case against low-level modularity, is weaker than its proponents make it out to be.

2.2 Fodor’s argument against high-level modularity

We turn now to the dark side of Fodor’s hypothesis: the claim that central systems are not modular.

Among the principal jobs of central systems is the fixation of belief, perceptual belief included, via non-demonstrative inference. Fodor (1983) argues that this sort of process cannot be realized in an informationally encapsulated system, and hence that central systems cannot be modular. Spelled out a bit further, his reasoning goes like this:

1. Central systems are responsible for belief fixation.

2. Belief fixation is isotropic and Quinean.

3. Isotropic and Quinean processes cannot be carried out by informationally encapsulated systems.

4. Belief fixation cannot be carried out by an informationally encapsulated system. [from 2 and 3]

5. Modular systems are informationally encapsulated.

6. Belief fixation is not modular. [from 4 and 5]

Hence:

7. Central systems are not modular. [from 1 and 6]
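The validity of this argument, as distinct from the truth of its premises, can be checked mechanically. The following Lean 4 sketch is a reconstruction for illustration, not Fodor’s own: it assumes a hypothetical type `System` of cognitive systems, treats each property as a predicate on that type (reading ‘belief fixation’ as ‘carries out belief fixation’), and uses only Lean’s core logic. Steps 4 and 6 appear as intermediate `have` statements.

```lean
-- Hypothetical formalization: each property is a predicate on systems.
theorem central_systems_not_modular {System : Type}
    (Central FixesBelief IsoQuinean Encapsulated Modular : System → Prop)
    -- Premise 1: central systems are responsible for belief fixation.
    (p1 : ∀ s, Central s → FixesBelief s)
    -- Premise 2: belief fixation is isotropic and Quinean.
    (p2 : ∀ s, FixesBelief s → IsoQuinean s)
    -- Premise 3: isotropic/Quinean processes are not carried out by encapsulated systems.
    (p3 : ∀ s, IsoQuinean s → ¬ Encapsulated s)
    -- Premise 5: modular systems are informationally encapsulated.
    (p5 : ∀ s, Modular s → Encapsulated s) :
    -- Conclusion 7: central systems are not modular.
    ∀ s, Central s → ¬ Modular s := by
  intro s hCentral hModular
  -- Step 4 (from 2 and 3): belief fixation is not carried out by an encapsulated system.
  have h4 : ∀ t, FixesBelief t → ¬ Encapsulated t :=
    fun t hFix => p3 t (p2 t hFix)
  -- Step 6 (from 4 and 5): belief fixation is not modular.
  have h6 : ∀ t, FixesBelief t → ¬ Modular t :=
    fun t hFix hMod => h4 t hFix (p5 t hMod)
  -- Step 7 (from 1 and 6).
  exact h6 s (p1 s hCentral) hModular
```

A successful check shows only that the conclusion follows from premises 1, 2, 3, and 5; whether those premises are true is the substantive question.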

The argument here contains two terms that call for explication, both of which relate to the notion of confirmation holism in the philosophy of science. The term ‘isotropic’ refers to the epistemic interconnectedness of beliefs in the sense that “everything that the scientist knows is, in principle, relevant to determining what else he ought to believe. In principle, our botany constrains our astronomy, if only we could think of ways to make them connect” (Fodor, 1983, p. 105). Antony (2003) presents a striking case of this sort of long-range interdisciplinary cross-talk in the sciences, between astronomy and archaeology; Carruthers (2006, pp. 356–357) furnishes another example, linking solar physics and evolutionary theory. On Fodor’s view, since scientific confirmation is akin to belief fixation, the fact that scientific confirmation is isotropic suggests that belief fixation in general has this property.

A second dimension of confirmation holism is that confirmation is ‘Quinean’, meaning that:

[T]he degree of confirmation assigned to any given hypothesis is sensitive to properties of the entire belief system … simplicity, plausibility, and conservatism are properties that theories have in virtue of their relation to the whole structure of scientific beliefs taken collectively. A measure of conservatism or simplicity would be a metric over global properties of belief systems. (Fodor, 1983, pp. 107–108; italics in original).

Here again, the analogy between scientific thinking and thinking in general underwrites the supposition that belief fixation is Quinean.

Both isotropy and Quineanness are features that preclude encapsulation, since their possession by a system would require extensive access to the contents of central memory, and hence a high degree of cognitive penetrability. Put in slightly different terms: isotropic and Quinean processes are ‘global’ rather than ‘local’, and since globality precludes encapsulation, isotropy and Quineanness preclude encapsulation as well.

By Fodor’s lights, the upshot of this argument—namely, the nonmodular character of central systems—is bad news for the scientific study of higher cognitive functions. This is neatly expressed by his “First Law of the Non-Existence of Cognitive Science,” according to which “[t]he more global (e.g., the more isotropic) a cognitive process is, the less anybody understands it” (Fodor, 1983, p. 107). His grounds for pessimism on this score are twofold. First, global systems are unlikely to be associated with local brain architecture, thereby rendering them unpromising objects of neuroscientific study:

We have seen that isotropic systems are unlikely to exhibit articulated neuroarchitecture. If, as seems plausible, neuroarchitecture is often a concomitant of constraints on information flow, then neural equipotentiality is what you would expect in systems in which every process has more or less uninhibited access to all the available data. The moral is that, to the extent that the existence of form/function correspondence is a precondition for successful neuropsychological research, there is not much to be expected in the way of a neuropsychology of thought (Fodor, 1983, p. 127).

Second, and more importantly, global processes are resistant to computational explanation, making them unpromising objects of psychological study:

The fact is that—considerations of their neural realization to one side—global systems are per se bad domains for computational models, at least of the sort that cognitive scientists are accustomed to employ. The condition for successful science (in physics, by the way, as well as psychology) is that nature should have joints to carve it at: relatively simple subsystems which can be artificially isolated and which behave, in isolation, in something like the way that they behave in situ. Modules satisfy this condition; Quinean/isotropic-wholistic-systems by definition do not. If, as I have supposed, the central cognitive processes are nonmodular, that is very bad news for cognitive science (Fodor, 1983, p. 128).

By Fodor’s lights, then, considerations that militate against high-level modularity also militate against the possibility of a robust science of higher cognition—not a happy result, as far as most cognitive scientists and philosophers of mind are concerned.

Gloomy implications aside, Fodor’s argument against high-level modularity is difficult to resist. It turns on two points: first, the negative correlation between globality and encapsulation; second, the positive correlation between encapsulation and modularity. Putting these points together, we get a negative correlation between globality and modularity: the more global the process, the less modular the system that executes it. Accordingly, there seem to be only three ways to block the conclusion of the argument:

- Deny that central processes are global.

- Deny that globality and encapsulation are negatively correlated.

- Deny that encapsulation and modularity are positively correlated.
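Treating the two correlations as simple conditionals (a simplification, since both are matters of degree; the notation is ours, not Fodor’s), the argument can be set out as follows, where S is any cognitive system, Enc means informationally encapsulated, and Mod means modular:

```latex
\begin{align*}
&\text{(P1)}\quad \mathrm{Central}(S) \rightarrow \mathrm{Global}(S)\\
&\text{(P2)}\quad \mathrm{Global}(S) \rightarrow \neg\,\mathrm{Enc}(S)\\
&\text{(P3)}\quad \mathrm{Mod}(S) \rightarrow \mathrm{Enc}(S)\\
&\therefore\quad \mathrm{Central}(S) \rightarrow \neg\,\mathrm{Mod}(S)
\end{align*}
```

The conclusion follows by chaining P1 and P2 with the contrapositive of P3, so each of the three options just listed amounts to denying one premise: the first denies P1, the second P2, and the third P3.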

Of these three options, the second is the least attractive, since it seems something like a conceptual truth that globality and encapsulation pull in opposite directions. The first option is slightly more appealing, but only slightly. The idea that central processes are relatively global, even if not as global as the process of confirmation in science suggests, is hard to deny. And that is all the argument really requires.

That leaves the third option: denying that modularity requires encapsulation. This is, in effect, the strategy pursued by Carruthers (2006). More specifically, Carruthers draws a distinction between two kinds of encapsulation: “narrow-scope” and “wide-scope.” A system is narrow-scope encapsulated if it cannot draw on any information held outside of it in the course of its processing. This corresponds to encapsulation as Fodor uses the term. By contrast, a system that is wide-scope encapsulated can draw on exogenous information during the course of its operations—it just cannot draw on all of that information. (Compare: “No exogenous information is accessible” vs. “Not all exogenous information is accessible.”) This is encapsulation in a weaker sense of the term than Fodor’s. Indeed, Carruthers’s use of the term “encapsulation” in this context is somewhat misleading, insofar as wide-scope encapsulated systems count as unencapsulated in Fodor’s sense (Prinz, 2006).
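The difference between the two notions is a difference in the scope of a negation relative to a quantifier. As a sketch (the symbols are ours, not Carruthers’s), let E be the set of items of information exogenous to a system S, and let Acc(S, i) mean that S can draw on item i in the course of its processing:

```latex
\begin{align*}
\text{Narrow-scope encapsulation:} &\quad \forall i \in E,\ \neg\,\mathrm{Acc}(S, i)\\
\text{Wide-scope encapsulation:} &\quad \neg\,\forall i \in E,\ \mathrm{Acc}(S, i)
  \;\equiv\; \exists i \in E,\ \neg\,\mathrm{Acc}(S, i)
\end{align*}
```

Provided E is nonempty, the first formula entails the second but not conversely. A system that can access all but one exogenous item satisfies the second formula while being unencapsulated in Fodor’s sense.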

Dropping the narrow-scope encapsulation requirement on modules raises a number of issues, not the least of which is that it reduces the power of modularity hypotheses to explain functional dissociations at the system level (Stokes & Bergeron, 2015). That said, if modularity requires only wide-scope encapsulation, then Fodor’s argument against central modularity no longer goes through. But given the importance of narrow-scope encapsulation to Fodorian modularity, all this shows is that central systems might be modular in a non-Fodorian way. The original argument that central systems are not Fodor-modular—and with it, the motivation for the negative strand of the modest modularity hypothesis—stands.

3. Post-Fodorian modularity

According to the massive modularity hypothesis, the mind is modular through and through, including the parts responsible for high-level cognitive functions like belief fixation, problem-solving, planning, and the like. Originally articulated and advocated by proponents of evolutionary psychology (Sperber, 1994, 2002; Cosmides & Tooby, 1992; Pinker, 1997; Barrett, 2005; Barrett & Kurzban, 2006), the hypothesis has received its most comprehensive and sophisticated defense at the hands of Carruthers (2006). Before proceeding to the details of that defense, however, we need to consider briefly what concept of modularity is in play.

The main thing to note here is that the operative notion of modularity differs significantly from the traditional Fodorian one. Carruthers is explicit on this point:

[If] a thesis of massive mental modularity is to be remotely plausible, then by ‘module’ we cannot mean ‘Fodor-module’. In particular, the properties of having proprietary transducers, shallow outputs, fast processing, significant innateness or innate channeling, and encapsulation will very likely have to be struck out. That leaves us with the idea that modules might be isolable function-specific processing systems, all or almost all of which are domain specific (in the content sense), whose operations aren’t subject to the will, which are associated with specific neural structures (albeit sometimes spatially dispersed ones), and whose internal operations may be inaccessible to the remainder of cognition. (Carruthers, 2006, p. 12)

Of the original set of nine features associated with Fodor-modules, then, Carruthers-modules retain at most five: dissociability, domain specificity, automaticity, neural localizability, and central inaccessibility. Conspicuously absent from the list is informational encapsulation, the feature most central to modularity in Fodor’s account. What’s more, Carruthers goes on to drop domain specificity, automaticity, and strong localizability (which rules out the sharing of parts between modules) from his initial list of five features, making his conception of modularity even more sparse (Carruthers, 2006, p. 62). Other proposals in the literature are similarly permissive in terms of the requirements a system must meet in order to count as modular; an extreme case is Barrett and Kurzban’s conception of modules as functionally specialized mechanisms (Barrett & Kurzban, 2006).

A second point, related to the first, is that defenders of massive modularity have chiefly been concerned to defend the modularity of central cognition, taking for granted that the mind is modular at the level of input systems. Thus, the hypothesis at issue for theorists like Carruthers might be best understood as the conjunction of two claims: first, that input systems are modular in a way that requires narrow-scope encapsulation; second, that central systems are modular, but only in a way that does not require this feature. In defending massive modularity, Carruthers focuses on the second of these claims, and so will we.

3.1 The case for massive modularity

The centerpiece of Carruthers (2006) consists of three arguments for massive modularity: the Argument from Design, the Argument from Animals, and the Argument from Computational Tractability. Let’s briefly consider each of them in turn.

The Argument from Design is as follows:

- Biological systems are designed systems, constructed incrementally.

- Such systems, when complex, need to be organized in a pervasively modular way, that is, as a hierarchical assembly of separately modifiable, functionally autonomous components.

- The human mind is a biological system, and is complex.

- Therefore, the human mind is (probably) massively modular in its organization. (Carruthers, 2006, p. 25)

The crux of this argument is the idea that complex biological systems cannot evolve unless they are organized in a modular way, where modular organization entails that each component of the system (that is, each module) can be selected for change independently of the others. In other words, the evolvability of the system as a whole requires the independent evolvability of its parts. The problem with this assumption is twofold (Woodward & Cowie, 2004). First, not all biological traits are independently modifiable. Having two lungs, for example, is a trait that cannot be changed without changing other traits of an organism, because the genetic and developmental mechanisms underlying lung numerosity causally depend on the genetic and developmental mechanisms underlying bilateral symmetry. Second, there appear to be developmental constraints on neurogenesis which rule out changing the size of one brain area independently of the others. This in turn suggests that natural selection cannot modify cognitive traits in isolation from one another, given that evolving the neural circuitry for one cognitive trait is likely to result in changes to the neural circuitry for other traits.

A further worry about the Argument from Design concerns the gap between its conclusion (the claim that the mind is massively modular in organization) and the hypothesis at issue (the claim that the mind is massively modular simpliciter). The worry is this. According to Carruthers, the modularity of a system implies the possession of just two properties: functional dissociability and inaccessibility of processing to external monitoring. Suppose that a system is massively modular in organization. It follows from the definition of modular organization that the components of the system are functionally autonomous and separately modifiable. Though functional autonomy guarantees dissociability, it’s not clear why separate modifiability guarantees inaccessibility to external monitoring. According to Carruthers, the reason is that “if the internal operations of a system (e.g., the details of the algorithm being executed) were available elsewhere, then they couldn’t be altered without some corresponding alteration being made in the system to which they are accessible” (Carruthers, 2006, p. 61). But this is a questionable assumption. On the contrary, it seems plausible that the internal operations of one system could be accessible to a second system in virtue of a monitoring mechanism that functions the same way regardless of the details of the processing being monitored. At a minimum, the claim that separate modifiability entails inaccessibility to external monitoring calls for more justification than Carruthers offers.

In short, the Argument from Design is susceptible to a number of objections. Fortunately, there’s a slightly stronger argument in the vicinity of this one, due to Cosmides and Tooby (1992). It goes like this:

- The human mind is a product of natural selection.

- In order to survive and reproduce, our human ancestors had to solve a number of recurrent adaptive problems (finding food, shelter, mates, etc.).

- Since adaptive problems are solved more quickly, efficiently, and reliably by modular systems than by non-modular ones, natural selection would have favored the evolution of a massively modular architecture.

- Therefore, the human mind is (probably) massively modular.

The force of this argument depends chiefly on the strength of the third premise. Not everyone is convinced, to put it mildly (Fodor, 2000; Samuels, 2000; Woodward & Cowie, 2004). First, the premise exemplifies adaptationist reasoning, and adaptationism in the philosophy of biology has more than its share of critics. Second, it is doubtful whether adaptive problem-solving in general is easier to accomplish with a large collection of specialized problem-solving devices than with a smaller collection of general problem-solving devices with access to a library of specialized programs (Samuels, 2000). Hence, insofar as the massive modularity hypothesis postulates an architecture of the first sort—as evolutionary psychologists’ ‘Swiss Army knife’ metaphor of the mind implies (Cosmides & Tooby, 1992)—the premise seems shaky.

A related argument is the Argument from Animals. Unlike the Argument from Design, this argument is never explicitly stated in Carruthers (2006). But here is a plausible reconstruction of it, due to Wilson (2008):

- Animal minds are massively modular.

- Human minds are incremental extensions of animal minds.

- Therefore, the human mind is (probably) massively modular.

Unfortunately for friends of massive modularity, this argument, like the Argument from Design, is vulnerable to a number of objections (Wilson, 2008). We’ll mention two of them here. First, it’s not easy to motivate the claim that animal minds are massively modular in the operative sense. Though Carruthers (2006) goes to heroic lengths to do so, the evidence he cites—e.g., for the domain specificity of animal learning mechanisms, à la Gallistel, 1990—adds up to less than what’s needed. The problem is that domain specificity is not sufficient for Carruthers-style modularity; indeed, it is not even one of the central characteristics of modularity in Carruthers’s account. So the argument falters at the first step. Second, even if animal minds are massively modular, and even if single incremental extensions of the animal mind preserve that feature, it’s quite possible that a series of such extensions of animal minds might have led to its loss. In other words, as Wilson (2008) puts it, it can’t be assumed that the conservation of massive modularity is transitive. And without this assumption, the Argument from Animals can’t go through.

Finally, we have the Argument from Computational Tractability (Carruthers, 2006, pp. 44–59). For the purposes of this argument, we assume that a mental process is computationally tractable if it can be specified at the algorithmic level in such a way that the execution of the process is feasible given time, energy, and other resource constraints on human cognition (Samuels, 2005). We also assume that a system is wide-scope encapsulated if in the course of its operations the system lacks access to at least some information exogenous to it. (Recall from §2.2 that wide-scope encapsulation is entailed by narrow-scope encapsulation, but not conversely.)

- The mind is computationally realized.

- All computational mental processes must be tractable.

- Tractable processing is possible only in wide-scope encapsulated systems.

- Hence, the mind must consist entirely of wide-scope encapsulated systems.

- Hence, the mind is (probably) massively modular.

There are two problems with this argument, however. The first problem has to do with the third premise, which states that tractability requires encapsulation, that is, the inaccessibility of at least some exogenous information to processing. What tractability actually requires is something weaker, namely, that not all information is accessed by the mechanism in the course of its operations (Samuels, 2005). In other words, it is possible for a system to have unlimited access to a database without actually accessing all of its contents. Though tractable computation rules out exhaustive search, for example, unencapsulated mechanisms need not engage in exhaustive search, so tractability does not require wide-scope encapsulation. The second problem with the argument concerns the last step. Though one might reasonably suppose that modular systems must be wide-scope encapsulated, the converse doesn’t follow, so it’s unclear how one gets from a claim about pervasive wide-scope encapsulation to a claim about pervasive modularity.
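Samuels’s point that tractability requires limited access rather than limited accessibility can be illustrated with a toy sketch. The function and its parameters are hypothetical and are not drawn from the literature: the procedure may consult any item in a database (nothing is walled off, so there is no encapsulation), but it stops after a fixed budget of lookups. The budget is a crude stand-in for whatever heuristics a real mechanism might use to avoid exhaustive search.

```python
from typing import Callable, Iterable, Optional


def bounded_lookup(
    database: Iterable[str],
    is_relevant: Callable[[str], bool],
    budget: int,
) -> tuple[Optional[str], int]:
    """Search an unrestricted database, examining at most `budget` items.

    Every item is accessible in principle (no encapsulation), yet the cost
    of a call is capped by `budget` rather than by the size of the database.
    Returns the first relevant item found (or None) and the number of items examined.
    """
    examined = 0
    for item in database:
        if examined >= budget:
            break  # stop short of exhaustive search
        examined += 1
        if is_relevant(item):
            return item, examined
    return None, examined


# Usage: a database of a million items, generated lazily.
facts = (f"fact-{n}" for n in range(10**6))
print(bounded_lookup(facts, lambda s: s.endswith("7"), budget=50))
```

Whatever the size of the database, a call examines at most `budget` items, so its cost does not grow with the amount of information the mechanism could in principle access.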

All in all, then, compelling general arguments for massive modularity are hard to come by. This is not yet to dismiss the possibility of modularity in high-level cognition, but it invites skepticism, especially given the paucity of empirical evidence directly supporting the hypothesis (Robbins, 2013). For example, it has been suggested that the capacity to think about social exchanges is subserved by a domain-specific, functionally dissociable, and innate mechanism (Stone et al., 2002; Sugiyama et al., 2002). However, it appears that deficits in social exchange reasoning do not occur in isolation, but are accompanied by other social-cognitive impairments (Prinz, 2006). Skepticism about modularity in other areas of central cognition, such as high-level mindreading, also seems to be the order of the day (Currie & Sterelny, 2000). The mindreading impairments characteristic of Asperger syndrome and high-functioning autism, for example, co-occur with sensory processing and executive function deficits (Frith, 2003). In general, there is little in the way of neuropsychological evidence of high-level modularity.

3.2 The case against massive modularity

Just as there are general theoretical arguments for massive modularity, there are general theoretical arguments against it. One argument takes the form of what Fodor (2000) calls the “Input Problem.” The problem is this. Suppose that the architecture of the mind is modular from top to bottom, and the mind consists entirely of domain-specific mechanisms. In that case, the outputs of each low-level (input) system will need to be routed to the appropriately specialized high-level (central) system for processing. But that routing can only be accomplished by a domain-general, non-modular mechanism—contradicting the initial supposition. In response to this problem, Barrett (2005) argues that processing in a massively modular architecture does not require a domain-general routing device of the sort envisaged by Fodor. An alternative solution, Barrett suggests, involves what he calls “enzymatic computation.” In this model, low-level systems pool their outputs together in a centrally accessible workspace where each central system is selectively activated by outputs that match its domain, in much the same way that enzymes selectively bind with substrates that match their specific templates. Like enzymes, specialized computational devices at the central level of the architecture accept a restricted range of inputs (analogous to biochemical substrates), perform specialized operations on that input (analogous to biochemical reactions), and produce outputs in a format usable by other computational devices (analogous to biochemical products). This obviates the need for a domain-general (hence, non-modular) mechanism to mediate between low-level and high-level systems.
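The enzymatic picture can be sketched as a shared workspace in which devices select their own inputs. This is a toy illustration of the idea as summarized above, not Barrett’s own model; the class names, the representation "kinds," and the two example devices are all hypothetical. Each pooled item is exposed to every device, each device binds only to items whose kind matches its template, and its products are posted back to the workspace, where other devices may bind to them.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Representation:
    kind: str  # hypothetical format tag, e.g. "face" or "person"
    content: str


@dataclass
class Enzyme:
    """A specialized device that 'binds' only to inputs whose kind matches its template."""
    name: str
    accepts: str  # the kind of representation it binds to (its 'substrate')
    transform: Callable[[Representation], Representation]  # its 'reaction'


def run_workspace(workspace: list[Representation], devices: list[Enzyme],
                  max_cycles: int = 10) -> list[Representation]:
    """Expose every pooled item to every device; each device self-selects by template.

    No router inspects items and dispatches them to the right device: matching
    happens locally inside each device, and products are added to the workspace.
    """
    handled = set()  # (device name, item) pairs already processed
    for _ in range(max_cycles):
        products = []
        for item in workspace:
            for device in devices:
                key = (device.name, item)
                if device.accepts == item.kind and key not in handled:
                    handled.add(key)
                    products.append(device.transform(item))
        if not products:  # nothing bound this cycle, so processing has settled
            break
        workspace.extend(products)
    return workspace


# Usage: pooled low-level outputs and two hypothetical central devices.
devices = [
    Enzyme("face-recognizer", "face", lambda r: Representation("person", f"person:{r.content}")),
    Enzyme("trust-evaluator", "person", lambda r: Representation("judgment", f"trust?({r.content})")),
]
ws = run_workspace([Representation("face", "Ann"), Representation("tone", "C#")], devices)
for r in ws:
    print(r.kind, r.content)
```

The "tone" item is left untouched because no device’s template matches it, and the judgment is reached in two steps without any dispatcher. The loop that exposes items to devices never inspects their content; all selection happens inside the devices, which is the feature the enzyme analogy is meant to capture.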

A second challenge to massive modularity is posed by the “Domain Integration Problem” (Carruthers, 2006). The problem here is that reasoning, planning, decision making, and other types of high-level cognition routinely involve the production of conceptually structured representations whose content crosses domains. This means that there must be some mechanism for integrating representations from multiple domains. But such a mechanism would be domain general rather than domain specific, and hence, non-modular. Like the Input Problem, however, the Domain Integration Problem is not insurmountable. One possible solution is that the language system has the capacity to play the role of content integrator in virtue of its capacity to transform conceptual representations that have been linguistically encoded (Hermer & Spelke, 1996; Carruthers, 2002, 2006). On this view, language is the vehicle of domain-general thought. (For doubts about the viability of this proposal, see Rice (2011) and Robbins (2002).)

Empirical objections to massive modularity take a variety of forms. To start with, there is neurobiological evidence of developmental plasticity, a phenomenon that tells against the idea that brain structure is innately specified (Buller, 2005; Buller & Hardcastle, 2000). However, not all proponents of massive modularity insist that modules are innately specified (Carruthers, 2006; Kurzban, Tooby, & Cosmides, 2001). Furthermore, it’s unclear to what extent the neurobiological record is at odds with nativism, given the evidence that specific genes are linked to the normal development of cortical structures in both humans and animals (Machery & Barrett, 2008; Ramus, 2006).

Another source of evidence against massive modularity comes from research on individual differences in high-level cognition (Rabaglia, Marcus, & Lane, 2011). Such differences tend to be strongly positively correlated across domains—a phenomenon known as the “positive manifold”—suggesting that high-level cognitive abilities are subserved by a domain-general mechanism, rather than by a suite of specialized modules. There is, however, an alternative explanation of the positive manifold. Since post-Fodorian modules are allowed to share parts (Carruthers, 2006), the correlations observed may stem from individual differences in the functioning of components spanning multiple domain-specific mechanisms.

3.3 Doubts about the debate

As noted earlier, proponents of the massive modularity hypothesis argue that the architecture of the mind is modular through and through. Opponents of massive modularity, on the other hand, argue that at least some components of mental architecture are not modular. Meaningful disagreement about the extent to which the mind is modular, however, can only take place against a background of agreement about what it means for a component of the mind to be modular. And given the lack of consensus in the cognitive science literature about what features are criterial of modularity, one might worry that partisans in the debate over massive modularity are talking past each other.

A version of this worry animates a recent critique of the massive modularity debate, according to which the controversy stems from a confusion between two levels of analysis: a functional level and an intentional level (Pietraszewski & Wertz, 2022). (While Pietraszewski and Wertz describe these as different levels of analysis in the sense of Marr (1982), their exposition also draws on Dennett’s (1987) idea of stances, which are better understood as strategies for prediction and explanation.) The crux of Pietraszewski and Wertz’s critique is as follows. At a functional level of analysis, modules are functionally specialized mechanisms. Since proponents of massive modularity operate at this level of analysis, they understand modularity in terms of functional specialization (Barrett & Kurzban, 2006). At an intentional level of analysis, by contrast, modules are parts of the mind that operate outside the sphere of influence of a central agency. At this level of analysis, the essence of modularity is informational encapsulation, a property that exists only at the intentional level. (The logic behind this restriction is that informational encapsulation entails independence from a central agency, and the concept of a central agency is meaningful only at an intentional level.) Since opponents of massive modularity operate at an intentional level of analysis, they understand modularity primarily in terms of informational encapsulation (Fodor, 1984, 2000). According to Pietraszewski and Wertz, the debate over massive modularity persists because proponents and opponents of massive modularity are operating at different levels of analysis (functional and intentional, respectively), and these different levels of analysis presuppose fundamentally different criteria of modularity (functional specialization and informational encapsulation, respectively).

Responding to this critique, Egeland (2024) takes issue with Pietraszewski and Wertz’s characterization of the massive modularity debate. For starters, Egeland contests their claim that partisans on the affirmative side of the debate typically conceive of modules as nothing more than functionally specialized mechanisms. In fact, he says, most proponents of massive modularity adopt a thicker conception of modularity, according to which modules are both functionally specialized and domain specific (Boyer & Barrett, 2015; Coltheart, 1999; Cosmides & Tooby, 1994; Sperber, 2002; Villena, 2023; Zerilli, 2017). As for the negative side of the debate, Egeland argues against the idea that opponents of massive modularity always conceive of modules as informationally encapsulated, noting that some of them regard domain specificity, rather than informational encapsulation, as the principal criterion of modularity (Bolhuis & Macphail, 2001; Chiappe & Gardner, 2012; Reader et al., 2011). Based on these considerations, Egeland concludes that Pietraszewski and Wertz’s dismissal of the massive modularity debate as the product of conceptual error is both unwarranted and counterproductive, insofar as it glosses over important questions about the extent to which the architecture of the mind is composed of domain-specific mechanisms (Margolis & Laurence, 2023).

4. Modularity and the border between perception and cognition

The hypothesis of modest modularity (§2) says that input systems are modular while central systems are non-modular. This section explores how philosophers have drawn on the hypothesis of modest modularity to either endorse or oppose the idea of a scientifically grounded distinction between perception and cognition.

That there is a commonsense distinction between seeing and believing is not in doubt. It is debatable, however, whether this commonsense distinction tracks a joint in nature: is there a border between perception and cognition that can be captured by the sciences of the mind? One way to defend the existence of such a border is to appeal to the hypothesis of modest modularity. If perception is modular and cognition is non-modular, then we can give an account of the border in terms of the brain’s information-processing architecture. This view is discussed in §4.1.

On certain ways of characterizing the information-processing architecture of the brain, however, it is unclear whether we can make sense of a border between perception and cognition. Proponents of predictive processing architectures, for example, emphasize the continuity between cognition and perception, and the ubiquity of top-down influences on the processing of sensory information. This leads some theorists to deny the existence of an architectural border between perception and cognition on the grounds that perception cannot be modular. Their arguments are considered in §4.2.

4.1 Characterizing the perception–cognition debate via modularity

The idea that there is an architectural border between perception and cognition takes its inspiration from the work of Fodor and Pylyshyn, although it is important to notice that neither makes this exact claim. Fodor’s (1983) architectural distinction is between two families of systems: modular systems performing input analysis, which constitute a natural kind, and non-modular central systems, which exploit the information provided by these input systems. Fodorian input systems include linguistic processing as well as perceptual processing, and Fodorian central systems are engaged in the rational fixation of belief by non-demonstrative inference rather than cognition as it is more broadly understood by cognitive psychology. Pylyshyn’s (1999) interest is specifically in the cognitive impenetrability of early visual processing rather than perception more generally, and he avoids using the term ‘modular’ to refer to cognitively impenetrable systems because he thinks it conflates several independent concepts. Technically, therefore, neither Fodor nor Pylyshyn is attempting to characterize a border between perception and cognition as they are standardly understood by the mind-sciences. Contemporary proponents of the architectural approach to the perception–cognition border draw on the insights of Fodor and Pylyshyn to defend the claim that there is a joint in nature that constitutes a border between perception and cognition, and that this border exists because perception is modular and cognition is non-modular. Versions of this claim are put forward by Firestone and Scholl (2016), Mandelbaum (2017), Green (2020), Quilty-Dunn (2020), and Clarke (2021).

Some defenders of the architectural approach to the perception–cognition border remain committed to the idea that modularity is incompatible with cognitive penetration. Firestone and Scholl, for example, claim that the nature of the joint between perception and cognition is “such that perception proceeds without any direct, unmediated influence from cognition” (Firestone & Scholl, 2016, p. 17). They propose that the standard purported examples of cognitive penetration are based on misinterpretations of the empirical data. Similarly, Quilty-Dunn (2020) takes seriously the idea that perception is modular because it relies on the cognitive impenetrability of stores of proprietary information, and that this is what makes it distinct from cognition. He allows that perception is influenced by the effects of attention but denies that such influences violate the cognitive impenetrability of perception.

Some defenders of the architectural joint between perception and cognition, however, defend a version of the modularity of perception on which it is compatible with perception being cognitively penetrated. Green’s (2020) “dimension restriction hypothesis” is an updated take on the modularity of perception, on which perceptual processes are constrained in a way that cognitive processes are not: the cognitive penetration of perception can occur, but only within strict limits. Green (2020) understands perception and cognition as separate psychological systems with restricted patterns of information flow between them. Clarke (2021) proposes that the joints between perceptual modules within a perceptual modality can be modified by cognitive processing without challenging the modularity of perception. He suggests that mental imagery, for example, can influence the inputs to a perceptual module via the visual buffer without altering the module’s proprietary database.

One reason to prefer stronger formulations of modularity, on which it is incompatible with cognitive penetration, concerns the tractability of perceptual processing. It is sometimes proposed that perceptual processing would be computationally intractable if it were cognitively penetrable. Green (2020) proposes that his dimensionally restricted account of modularity is consistent with the tractability constraint due to its strict limitations on information flow between perceptual and cognitive systems, and Clarke (2021) proposes that as long as no cognitive information has been added to a perceptual module’s proprietary database, worries about intractability do not arise. Brooke-Wilson (2023) denies that tractability concerns motivate informational encapsulation in the first place: he argues that informational encapsulation is neither necessary nor sufficient for tractability, and thus that informational encapsulation is less important than many have thought. If we reject informational encapsulation as an essential feature of modularity, however, it becomes more difficult to use modularity to characterize an architectural distinction between perception and cognition, since some paradigmatic cognitive processes may end up on the modular side of the distinction rather than the non-modular side (Clarke & Beck, 2023).

It is possible to defend the claim that there is a joint in nature between perception and cognition while denying that the correct way to characterize the joint is by appealing to modularity. One way to do this is by focusing instead on the difference in format between perceptual representations and cognitive representations. Block (2023), for example, argues that perceptual representations are nonpropositional, nonconceptual, and iconic, while cognitive representations are propositional, conceptual, and discursive. Characterizing the perception–cognition border in terms of representational format is not necessarily in tension with an architectural view of the border in terms of modularity. Those who take the perception–cognition border to be identifiable via representational format also tend to be sympathetic to modularity (e.g., Burnston, 2017). Block (2023) proposes that there is substantial truth to the modularity hypothesis, although he thinks that the border between perception and cognition is best captured in terms of representational format.

Another way to defend the claim that there is a perception–cognition border without relying on modularity is to distinguish between mental states that depend on proximal stimulation and those that do not. For example, Phillips (2017) and Beck (2018) propose that perception is stimulus-dependent while cognition is stimulus-independent. For further discussion of different ways to defend the idea of a border between perception and cognition, see the overviews by Watzl, Sundberg, and Nes (2021) and Clarke and Beck (2023).

4.2 Challenging the perception–cognition border via modularity

The claim that there is a perception–cognition boundary has come into question (Shea, 2014). One way to challenge it is to appeal to empirical evidence of cognitive penetration, infer that perception is not informationally encapsulated, and conclude that perception is not modular. This section focuses on an alternative way to challenge the modularity of perception, which involves arguing that our best theories of mental architecture rule out modularity in principle. This strategy is primarily associated with ‘neural reuse’ architectures and ‘predictive processing’ architectures.

Proponents of neural reuse architectures emphasize the extent to which neural mechanisms which originally evolved or developed for one cognitive function can be deployed to service different cognitive functions (Anderson, 2010; Hurley, 2008). Zerilli (2020) argues that the only kinds of dissociable units we encounter in the brain have little in common with the modules posited by psychology and cognitive science, and thus that neural reuse architectures have disruptive implications for the forms of functional modularity adopted by Fodor or Carruthers. While it is true that neural reuse theories suggest that the same individual brain regions can implement multiple functional modules, this is a challenge primarily to anatomical modularity in the form of neural localizability (see §1). Anderson (2010) proposes that the sort of functional modularity characterized by domain specificity and informational encapsulation does not require anatomical modularity, and thus that the lack of anatomical modularity in neural reuse architectures does not provide a straightforward challenge to the modularity of mind.

Proponents of predictive processing architectures claim that the brain is a hierarchically structured engine of top-down prediction-error minimization, in which higher-level generative models predict the information in lower-level generative models (Clark, 2013; Hohwy, 2013). At the lowest level, the generative model predicts the sensory input. The only information passed from lower to higher levels in the hierarchy is in the form of prediction errors, which capture how the actual input to each layer or model differs from the predicted input. Higher-level models provide Bayesian priors to lower-level models and are updated by prediction errors at lower levels in accordance with Bayes’ rule. Such a heavily top-down architecture, in which there is “no theoretical or anatomical border preventing top-down projections from high to low levels of the perceptual hierarchy” (Hohwy, 2013, p. 122), seems liable to cognitive penetration. Vetter and Newen (2014), Lupyan (2015), and Cermeño-Aínsa (2021) argue that the top-down nature of predictive processing architectures must lead to cognitive penetration, and Vance and Stokes (2017) take this as evidence that such architectures are necessarily non-modular. Clark (2013) concurs, holding that according to the predictive processing approach, perception is theory-laden and knowledge-driven; he proposes that “[t]o perceive the world just is to use what you know to explain away the sensory signal” (Clark, 2013, p. 190).
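The Bayesian update described above can be made concrete for the simplest case. The following Python sketch assumes a single level with Gaussian (normally distributed) beliefs, for which Bayes’ rule has a closed form: the posterior mean is the prior mean plus the prediction error, weighted by the relative precision (inverse variance) of the input. The names `Gaussian` and `update`, and all numerical values, are hypothetical; this is a textbook simplification offered for illustration, not a model proposed by Clark or Hohwy.

```python
from dataclasses import dataclass


@dataclass
class Gaussian:
    mean: float
    precision: float  # inverse variance: higher means more confident


def update(prior: Gaussian, observation: float, obs_precision: float) -> Gaussian:
    """Combine a top-down prior with bottom-up input via Bayes' rule (Gaussian case)."""
    # Prediction error: how far the input departs from the top-down prediction.
    error = observation - prior.mean
    # Precisions add when two Gaussian sources of evidence are combined.
    post_precision = prior.precision + obs_precision
    # The error is weighted by how reliable the input is relative to the prior.
    gain = obs_precision / post_precision
    return Gaussian(prior.mean + gain * error, post_precision)


# Hypothetical example: the same prior and the same input, at two noise levels.
prior = Gaussian(mean=0.0, precision=4.0)
noisy = update(prior, observation=1.0, obs_precision=1.0)     # posterior mean 0.2
precise = update(prior, observation=1.0, obs_precision=16.0)  # posterior mean 0.8
```

With noisy input the estimate stays close to the top-down prediction; with precise input the input dominates. In schemes of this kind, then, how much the top-down prior shapes the result depends on the reliability of the input.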

These concerns lead Clark (2013) to doubt whether there is a border between perception and cognition. He proposes that predictive architecture “makes the lines between perception and cognition fuzzy, perhaps even vanishing” (Clark, 2013, p. 190) and suggests that perception and cognition are “profoundly unified and, in important respects, continuous” (Clark, 2013, p. 187). Philosophers have responded to these concerns by arguing that while predictive processing architectures may allow cognitive penetration, they don’t necessitate it (Macpherson, 2015). Hohwy (2013) suggests that the top-down processing associated with predictive architectures builds in a certain kind of evidential insulation, such that cognitive penetration will occur only under conditions of noisy input or uncertainty. Drayson (2017) argues that the probabilistic causal influence from one level in the hierarchy to the level below need not be transitive, and so we can accept that each level in the predictive hierarchy is causally influenced by the level above it without having to accept that each level is causally influenced by all the levels above it. In addition to arguing that predictive processing architectures do not necessitate the cognitive penetration of perception, some philosophers go so far as to claim that predictive processing architectures do in fact exhibit modularity (Beni, 2022; Burnston, 2021; Drayson, 2017).
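The non-transitivity point can be illustrated with a toy probabilistic chain. In the following Python sketch, which is a hypothetical example and not Drayson’s own, a top level A influences an intermediate level B, and B influences a bottom level C, yet A has no influence on C, because A shifts only one component of B’s state while C is sensitive only to the other. The probability tables are invented for illustration, and the chain is not a predictive processing model; it shows only that influence between adjacent levels does not by itself guarantee influence across levels.

```python
from fractions import Fraction as F
from itertools import product


# Hypothetical chain A -> B -> C. B's state is a pair (b1, b2).
def p_b(b, a):
    """P(B = b | A = a): A shifts only the first component of B."""
    b1, b2 = b
    p_b1 = F(9, 10) if a == 1 else F(1, 10)  # A strongly influences b1
    p1 = p_b1 if b1 == 1 else 1 - p_b1
    p2 = F(1, 2)                             # b2 is unaffected by A
    return p1 * p2


def p_c_given_b(b):
    """P(C = 1 | B = b): C is sensitive only to the second component."""
    _, b2 = b
    return F(4, 5) if b2 == 1 else F(1, 5)


def p_c_given_a(a):
    """P(C = 1 | A = a), marginalizing over the intermediate level B."""
    return sum(p_b(b, a) * p_c_given_b(b) for b in product((0, 1), repeat=2))


print(p_c_given_a(1), p_c_given_a(0))  # prints: 1/2 1/2
```

Exact fractions are used so that the equality of the two conditional probabilities is not obscured by floating-point rounding.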

Even if Clark is right that predictive processing architectures do not allow for modularity, however, it would not follow from this that there is no distinction between perception and cognition. Clark’s claim that predictive processing architectures provide “a genuine departure from many of our previous ways of thinking about perception, cognition, and the human cognitive architecture” (Clark, 2013, p. 187) seems to rely on the idea that modularity is the default view of perception. This position is challenged at length by Stokes (2021). Furthermore, modularity is not the only candidate for drawing a joint in nature between perception and cognition: see the alternative approaches to the perception–cognition border discussed in §4.1.

4.3 Modularity beyond perception and cognition

Discussions of modularity in philosophy and cognitive science tend to focus on two aspects of the mind: sensing and perceiving the world (e.g., vision, audition, olfaction), and thinking about the world (e.g., reasoning, planning, decision making). As important and fundamental as these capacities are, they do not exhaust the psychological domain, which also includes affective capacities (e.g., pain) and agentive capacities (e.g., motor control). Though the modularity of these further capacities has been much less well investigated, a growing literature in this area testifies to the importance of modularity beyond the perception–cognition divide.

Regarding the pain system, the received view, at least until recently, has been that pain is not modular. This view rests primarily on evidence that pain is cognitively penetrable, such as the apparent modulation of pain by expectations (Gligorov, 2017) and the phenomenon of placebo analgesia (Shevlin & Friesen, 2021). Together with the assumption that cognitive penetrability precludes informational encapsulation, and the assumption that modularity requires informational encapsulation, this evidence suggests that the pain system is non-modular. Pushing back against this consensus, Casser and Clarke (2023) argue that the evidence for the cognitive penetrability of pain is inconclusive. They also argue that the evidence for the judgment independence of pain, for example from studies of the Thermal Grill Illusion, is robust; such evidence militates against cognitive penetrability and in favor of informational encapsulation, and hence in favor of modularity. But even if Casser and Clarke’s argument goes through, there may be other ways to motivate the conclusion that pain is not modular. For example, Skrzypulec (2023) argues that central cognitive mechanisms are partly constitutive of the pain system, which in turn suggests that pain may not be modular after all. In short, on the question of whether the pain system is modular, the jury is still out.

As for the modularity of motor control, Mylopoulos (2021) argues that despite being cognitively penetrable, the motor system is informationally encapsulated, hence modular in the Fodorian sense. On her view, motor control is (a) cognitively penetrable because it takes states of central cognition (i.e., intentions or action plans) as inputs, and (b) informationally encapsulated because in processing its inputs it accesses only motor schemas stored in a proprietary database and sensory information selected for by attention. There are two problems with this argument, however. First, the fact that a system takes states of central cognition as inputs does not show that in processing its inputs, the system is directly causally sensitive to states of central cognition. (Recall the distinction between information drawn on by a system in the course of its operations, on the one hand, and inputs to the system carrying out those operations, on the other; see §1 above.) Hence, even if the motor system took intentions as inputs, that would not show that the system is cognitively penetrable, except in a trivial sense. Second, and more importantly, informational encapsulation requires that when computing an input–output function, a system draws only on information contained in its inputs and information stored in a proprietary database, and nothing else. But on Mylopoulos’s proposal, the motor system does not meet this requirement, since in processing its inputs it also draws on attentionally selected sensory information, which is neither contained in those inputs nor stored in its proprietary database. As a result, the case she makes for the modularity of motor control is less than compelling.
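The contrast between inputs to a system and the information it draws on while processing them can be made concrete with a toy Python sketch. Everything here (the class names, the `schemas` and `beliefs` dictionaries, the grip labels) is hypothetical and is not drawn from Mylopoulos or Fodor; it illustrates only the bare structural idea. Both systems receive the same intention-like input, but only the second consults a store of central beliefs during processing, so only its output varies when those beliefs change.

```python
from types import MappingProxyType


class EncapsulatedModule:
    """Toy input-output system that draws only on its input and a private database."""

    def __init__(self, database):
        # Proprietary database: a private copy, exposed only through a read-only view.
        self._db = MappingProxyType(dict(database))

    def process(self, stimulus):
        # The computation consults only `stimulus` and `self._db`, nothing else.
        return self._db.get(stimulus, "no-match")


class PenetrableSystem:
    """Contrast case: processing also consults an external store of central beliefs."""

    def __init__(self, database, central_beliefs):
        self._db = dict(database)
        self._beliefs = central_beliefs  # shared and mutable, outside the system

    def process(self, stimulus):
        if stimulus in self._beliefs:  # a background belief overrides the database
            return self._beliefs[stimulus]
        return self._db.get(stimulus, "no-match")


# Hypothetical usage: an intention serves as *input* to both systems.
schemas = {"grasp-cup": "precision-grip"}  # hypothetical motor schemas
beliefs = {}                               # hypothetical central-belief store
motor = EncapsulatedModule(schemas)
contrast = PenetrableSystem(schemas, beliefs)

before = (motor.process("grasp-cup"), contrast.process("grasp-cup"))
beliefs["grasp-cup"] = "power-grip"        # change a background belief
after = (motor.process("grasp-cup"), contrast.process("grasp-cup"))
# before == ("precision-grip", "precision-grip")
# after  == ("precision-grip", "power-grip")
```

Copying the database and wrapping it in `MappingProxyType` stands in for the idea that the proprietary database belongs to the module alone: later changes to the `schemas` dictionary passed in do not reach the module, and the module offers no way to write to its own store.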

5. Modularity and philosophy

Interest in modularity is not confined to cognitive science and the philosophy of mind; it extends well into a number of allied fields. In epistemology, modularity has been invoked to defend the legitimacy of a theory-neutral type of observation, and hence the possibility of some degree of consensus among scientists with divergent theoretical commitments (Fodor, 1984). The ensuing debate on this issue (Churchland, 1988; Fodor, 1988; McCauley & Henrich, 2006) holds lasting significance for the general philosophy of science, particularly for controversies regarding the status of scientific realism. Relatedly, evidence of the cognitive penetrability of perception has given rise to worries about the justification of perceptual beliefs (Siegel, 2012; Stokes, 2012). In ethics, evidence of this sort has been used to cast doubt on ethical intuitionism as an account of moral epistemology (Cowan, 2014). In philosophy of language, modularity has figured in theorizing about linguistic communication, for example, in relevance theorists’ suggestion that speech interpretation, pragmatic warts and all, is a modular process (Sperber & Wilson, 2002). It has also been used to demarcate the boundary between semantics and pragmatics, and to defend a strikingly austere version of semantic minimalism (Borg, 2004). Though the success of these deployments of modularity theory is subject to dispute (e.g., see Robbins, 2007, for doubts about the modularity of semantics), their existence testifies to the relevance of the concept of modularity to philosophical inquiry in a variety of domains.

Bibliography

- Anderson, M. L., 2010. Neural reuse: A fundamental organizational principle of the brain. Behavioral and Brain Sciences, 33: 245–313.

- –––, 2014. After phrenology: Neural reuse and the interactive brain, Cambridge, MA: MIT Press.

- Antony, L. M., 2003. Rabbit-pots and supernovas: On the relevance of psychological data to linguistic theory. In A. Barber (ed.), Epistemology of Language, Oxford, UK: Oxford University Press, pp. 47–68.

- Arbib, M., 1987. Modularity and interaction of brain regions underlying visuomotor coordination. In J. L. Garfield (ed.), Modularity in Knowledge Representation and Natural-Language Understanding, Cambridge, MA: MIT Press, pp. 333–363.

- Ariew, A., 1999. Innateness is canalization: In defense of a developmental account of innateness. In V. G. Hardcastle (ed.), Where Biology Meets Psychology, Cambridge, MA: MIT Press, pp. 117–138.

- Balcetis, E. and Dunning, D., 2006. See what you want to see: Motivational influences on visual perception. Journal of Personality and Social Psychology, 91: 612–625.

- –––, 2010. Wishful seeing: More desired objects are seen as closer. Psychological Science, 21: 147–152.

- Bargh, J. A. and Chartrand, T. L., 1999. The unbearable automaticity of being. American Psychologist, 54: 462–479.

- Barrett, H. C., 2005. Enzymatic computation and cognitive modularity. Mind & Language, 20: 259–287.

- Barrett, H. C. and Kurzban, R., 2006. Modularity in cognition: Framing the debate. Psychological Review, 113: 628–647.

- Beck, J., 2018. Marking the perception–cognition boundary: The criterion of stimulus-dependence. Australasian Journal of Philosophy, 96: 319–334.

- Beni, M. D., 2022. A tale of two architectures. Consciousness and Cognition, 98 (C): 103257.

- Block, N., 2023. The Border Between Seeing and Thinking, New York, NY: Oxford University Press.

- Bolhuis, J. J. and Macphail, E. M., 2001. A critique of the neuroecology of learning and memory. Trends in Cognitive Sciences, 5: 426–433.

- Borg, E., 2004. Minimal Semantics, Oxford, UK: Oxford University Press.

- Boyer, P. and Barrett, H. C., 2015. Intuitive ontologies and domain specificity. In D. M. Buss (ed.), Handbook of Evolutionary Psychology, Hoboken, NJ: Wiley, pp. 1–19.

- Brooke-Wilson, T., 2023. How is perception tractable? Philosophical Review, 132: 239–292.

- Bruner, J. and Goodman, C. C., 1947. Value and need as organizing factors in perception. Journal of Abnormal and Social Psychology, 42: 33–44.

- Buller, D., 2005. Adapting Minds, Cambridge, MA: MIT Press.

- Buller, D. and Hardcastle, V. G., 2000. Evolutionary psychology, meet developmental neurobiology: Against promiscuous modularity. Brain and Mind, 1: 302–325.

- Burnston, D. C., 2017. Cognitive penetration and the cognition–perception interface. Synthese, 194: 3645–3668.

- –––, 2021. Bayes, predictive processing, and the cognitive architecture of motor control. Consciousness and Cognition, 96 (C): 103218.

- Carruthers, P., 2002. The cognitive functions of language. Behavioral and Brain Sciences, 25: 657–725.

- –––, 2006. The Architecture of the Mind, Oxford, UK: Oxford University Press.

- Casser, L. and Clarke, S., 2023. Is pain modular? Mind & Language, 38: 828–846.

- Cermeño-Aínsa, S., 2021. Predictive coding and the strong thesis of cognitive penetrability. Theoria, 36: 341–360.